Lei Feng network (search "Lei Feng network public attention") : Deng Qili, author of this article, Harbin Institute of Technology, Shenzhen Graduate School of Computer Science master's second grade, tutor "Pengcheng scholar" Professor Xu Yong. Research interests are deep learning and computer vision. He won the second prize of the first Alibaba Large-scale Image Search Contest in 2015, ranking third overall.

Summary

In recent years, deep learning has made major breakthroughs in various computer vision tasks. One of the important factors is its powerful non-linear representation ability and ability to understand deeper levels of information in images. This article summarizes and summarizes the visual example search method based on deep learning, hoping to give readers inspiration.

Foreword

Given a query image that contains an object, the task of the visual instance search is to find from the candidate image library those pictures that contain the same object as the query image. Compared with the general image search, the search conditions for the instance search are more rigorous - whether it contains the same objects, such as the same clothes, the same car, and so on. This problem has a very wide range of application prospects, such as product search, vehicle search, and image-based geographic location identification. For example, mobile product image search is to find the same or similar products from the product library by analyzing the product photos taken with the mobile phone camera.

However, in actual scenes, due to interference factors such as pose, light, and background, two images containing the same object often differ greatly in appearance. From this point of view, the essential problem of visual instance search is what image features should be learned so that images containing the same object are similar in feature space.

In recent years, deep learning has made major breakthroughs in various computer vision tasks, including visual search tasks. This paper mainly analyzes and summarizes the deep learning based instance search algorithm (hereinafter referred to as "depth instance search algorithm"). The article is divided into four parts: The first part summarizes the general flow of the classic visual instance search algorithm; The third part introduces the main depth of case search algorithms in recent years from two aspects; the end-to-end feature learning method and feature coding method based on CNN features; the fourth part will summarize the first Alibaba large-scale image in 2015. The related methods appearing in Alibaba Large-scale Image Search Challenge (ALISC) introduce some techniques and methods that can improve the performance of instance search.

Before deep learning is popular, typical example search algorithms are generally divided into three phases: firstly extracting locally invariant features densely in a given image, and then further coding these locally invariant features into a compact image representation. The similarity calculation (based on the image representation obtained in the second step) is performed on the query image and the image in the candidate image library to find those images that belong to the same instance.

1. Partially invariant features. The feature of local invariant feature is to extract the detail information of the local area of ​​the image, do not care about the global information, and have certain invariance to the light changes and geometric transformations in the local area. This is very meaningful for instance search because the target object can appear in any area of ​​the image with a geometric transformation. In early work, many instance search methods used SIFT features.

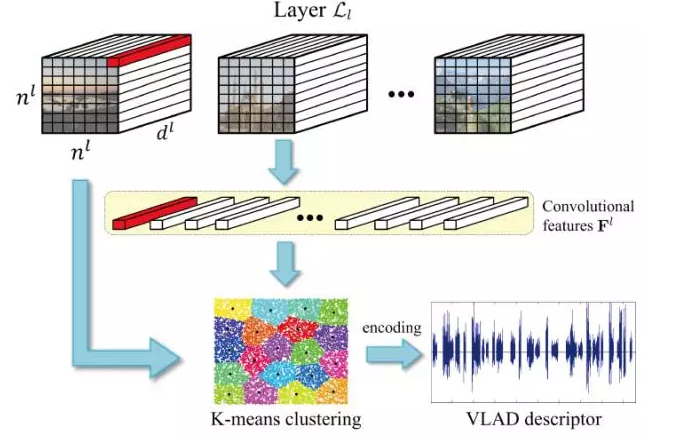

2. Feature encoding. There are two aspects to further coding the local features: mining the relevant information between these local features to enhance the discriminative ability; a single compact feature vector is easier to implement indexing and improve the search speed. At present, common methods include VLAD (vector of locally aggregated descriptors), Fisher Vectors, and triangular embedding. Here, this article briefly introduces the VLAD method (which appears multiple times later in this article): a) The VLAD method first uses k-means to obtain a codebook containing k centers, and then each local feature is assigned to the center closest to it. Points (we call this step a hard-assignment, which will be improved later in the related articles), and finally sum the residuals between these local features and their assigned center points as the final image representation. As can be seen from the above, the VLAD method has an unordered nature - it does not care about the spatial location of the local features, so it can further decouple the global spatial information and has a good robustness to the geometric transformation.

3. Similarity calculations. A straightforward approach is to calculate distances between features based on distance functions, such as Euclidean distances, cosine distances, and so on. The other is to learn the corresponding distance functions, such as LMNN, ITML and other metric learning methods.

Summary: The performance of classic visual instance search algorithms is often limited by the ability to represent hand-crafted features. When deep learning is applied to an instance search task, the main point is to start with the feature representation, that is, how to extract more discriminative image features.

NetVLAD: CNN architecture for weakly supervised place recognition (CVPR 2016)

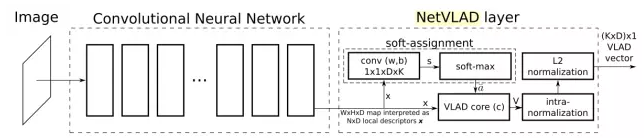

This article is the work of Relja Arandjelović et al. from INRIA. This article focuses on one specific application of instance search - location recognition. In the location identification problem, given a query image, a large location tag data set is queried, and then the location of the query picture is estimated using the positions of those similar pictures. The author first used the Google Street View Time Machine to establish a large-scale location tag data set, and then proposed a convolutional neural network architecture. NetVLAD - embeds the VLAD method into the CNN network and implements "end-to-end" Learning. The method is shown below:

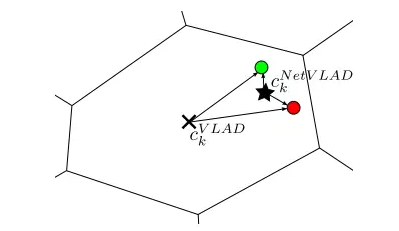

The hard-assignment operation in the original VLAD method is not divisible (by assigning each local feature to its nearest center point), so it cannot be directly embedded in the CNN network and participate in error back propagation. The solution to this article is to use a softmax function to convert this hard-assignment operation to a soft-assignment operation—using the 1x1 convolution and softmax functions to find the probability/weight of the local feature that belongs to each center point, and then assign it to The center point with the greatest probability/weight. So NetVLAD contains three parameters that can be learned, where is the parameter of the above 1x1 convolution, used to predict soft-assignment, expressed as the center point of each cluster. And complete the corresponding cumulative residual operation in the VLAD core layer in the above figure. The author shows us the advantages of NetVLAD over the original VLAD through the following diagram: (More flexibility - learn better cluster center points)

Another improvement of this article is Weakly supervised triplet ranking loss. In order to solve the problem that the training data may contain noise, the method replaces the positive and negative samples in the triplet loss with a positive positive sample set (at least one positive sample, but not sure which one) and an explicit negative sample set. And during training, the feature distance between the constrained query picture and the most likely positive sample in the positive sample set is smaller than the feature distance between the query picture and the pictures in all negative sample sets.

Deep Relative Distance Learning: Tell the Difference Between Similar Vehicles (CVPR 2016)

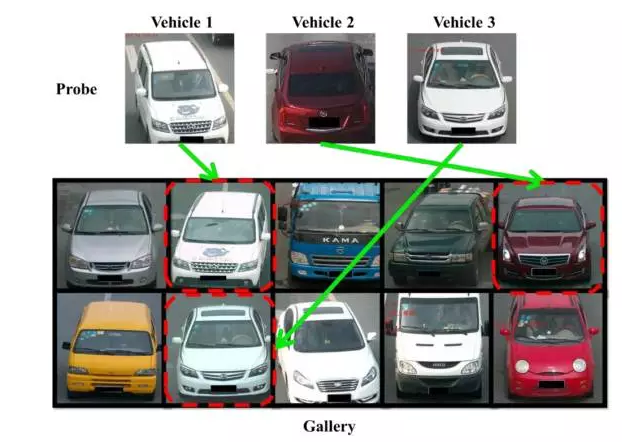

The next article focuses on vehicle identification/search issues from the work of Hongye Liu and others at Peking University. As shown in the figure below, this problem can also be seen as an instance search task.

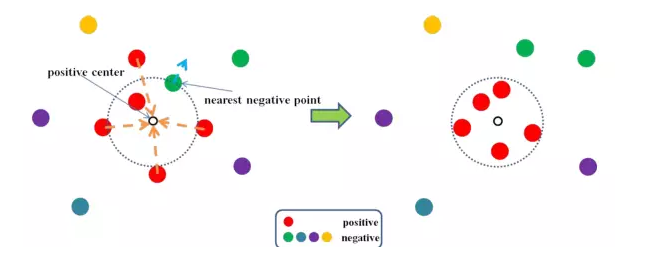

Like many supervised deep-instance search methods, this article aims to map the original image into an Euclidean feature space and make the images of the same vehicle more clustered in the space, while the non-homogeneous vehicle images are more keep away. In order to achieve this effect, a common method is to train the CNN network by optimizing the triplet ranking loss. However, the author found some problems with the original triplet ranking loss, as shown in the following figure:

For the same sample, the left triple will be adjusted by the loss function and the right triple will be ignored. The difference between the two is that the choice of anchor is not the same, which leads to instability during training. To overcome this problem, the author used coupled clusters loss (CCL) instead of triplet tracking loss. The loss function is characterized by the fact that the triple becomes a positive and negative set of samples, and the samples in the positive sample are clustered with each other, and the samples in the negative set are more distant from those positive samples, thus avoiding Randomly select the negative effects of the anchor sample. The specific effect of this loss function is shown in the figure below:

Finally, this article focuses on the particularities of vehicle problems and combines the above-designed coupled clusters loss to design a hybrid network architecture and build a relevant vehicle database to provide the required training samples.

DeepFashion: Powering Robust Clothes Recognition and Retrieval with Rich Annotations (CVPR 2016)

The last article was also published in CVPR 2016. It introduced clothing identification and search. It is also a task related to case search. It comes from the work of Ziwei Liu and others of the Chinese University of Hong Kong. First, this article describes a database called DeepFashion. The database contains more than 800K clothing images, 50 fine-grained categories and 1000 attributes, and additionally provides key points for clothes and cross-pose/cross-domain pair correspondences. Some specific examples are shown below:

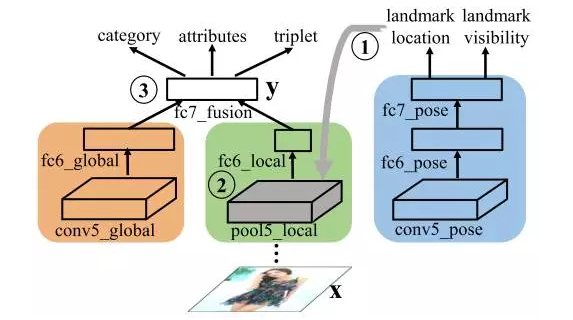

Then, in order to illustrate the effect of the database, the author proposes a novel deep learning network, FashionNet - through the joint prediction of key points and attributes of clothes, learning to obtain more distinguishing features. The overall framework of the network is as follows:

FashionNet's forward calculation process is divided into three phases: In the first phase, a picture of a garment is entered into the blue branch of the network to predict whether the key point of the garment is visible or positional. In the second stage, according to the position of the key points predicted in the previous step, the landmark pooling layer obtains the local features of the clothes. In the third stage, the global features of the "fc6 global" layer and the local features of "fc6 local" are stitched together to form "fc7_fusion" as the final image feature. FashionNet introduces four loss functions and uses an iterative training approach to optimize. These losses are: the regression loss corresponds to the keypoint location, the softmax loss corresponds to whether the keypoint is visible and the clothing category, the cross-entropy loss function corresponds to the property prediction and the triplet loss function corresponds to similarity learning between clothes. The author compares FashionNet with other methods from the three aspects of clothing classification, attribute prediction and clothing search, and has achieved significantly better results.

Summary: When there are enough labeled data, deep learning can learn image features and measurement functions at the same time. The idea behind it is that according to a given metric function, the learning feature makes the feature have the best discriminability in the metric space. Therefore, the main research direction of the end-to-end feature learning method is how to construct a better feature representation and loss function form.

Feature Coding Method Based on CNN FeatureThe depth example search algorithm introduced in the above section focuses on the data-driven end-to-end feature learning method and the corresponding image search data set. Next, this article focuses on another issue: how to extract effective image features when these related search data sets are absent. In order to overcome the insufficiency of domain data, a feasible strategy is to extract the feature map of a certain layer from the CNN pre-training model (the CNN model trained on other task data sets, such as the ImageNet image classification data set) ( Feature map), which is encoded to get image features that are suitable for instance search tasks. This section will introduce some major methods based on relevant papers in recent years (in particular, all the CNN models in this section are pre-trained models based on the ImageNet classification data set).

Multi-Scale Orderless Pooling of Deep Convolutional Activation Features (ECCV 2014)

This article was published in ECVC 2014. It was from the work of the University of North Carolina at Chapel Hill and the University of Illinois at Champaigne Liwei Wang. Because global CNN features lack geometric invariance, the classification and matching of variable scenes are limited. The author attributed this problem to the fact that the global CNN feature contains too much spatial information, so he proposed multi-scale orderless pooling (MOP-CNN)—combining CNN features with unordered VLAD coding methods.

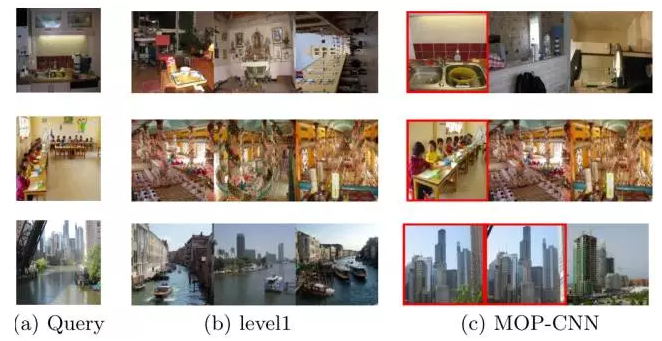

The main steps of MOP-CNN are: firstly consider the CNN network as a "local feature" extractor, then extract the "local features" of the image on multiple scales, and use VLAD to encode the "local features" of each scale as The image features on this scale, and finally connect the image features of all scales together to form the final image features. The framework for extracting features is as follows:

The authors performed tests on the two tasks of classification and instance search. As shown in the following figure, it is proved that MOP-CNN has better classification and search results than general CNN global features.

Exploiting Local Features from Deep Networks for Image Retrieval (CVPR 2015 workshop)

This article was published in CVPR 2015 workshop. It was from the work of Joe Yue-Hei Ng et al. Many recent studies have shown that the convolutional feature map is more suitable for instance search than the output of fully connected layers. This article describes how to convert the feature map of a convolutional layer into "local features" and use VLAD to encode them as image features. In addition, the author also conducted a series of related experiments to observe the effect of different convolution layer feature maps on the accuracy of the case search.

Aggregating Deep Convolutional Features for Image Retrieval (ICCV 2015)

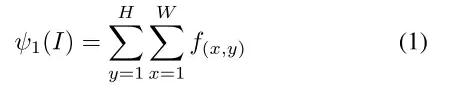

The next article, published in ICCV 2015, is from the work of Artem Babenko, Moscow Institute of Physics and Technology, and Victor Lempitsky, Skolkovo Institute of Technology. From the above two articles, it can be seen that many deep-instance search methods use an unordered encoding method. However, these encoding methods, including VLAD and Fisher Vector, are usually computationally expensive. In order to overcome this problem, this article designs a simpler and more efficient coding method called Sum pooing. The specific definition of Sum pooling is as follows:

Among them is the local feature of the convolutional layer in space (the method for extracting local features here is consistent with the previous article). After using sum pooling, PCA and L2 are further normalized to global features to get the final features. The author compares these methods with Fisher Vector, Triangulation embedding, and max pooling, and demonstrates that the sum pooling method is not only computationally simple but also better.

Where to Focus: Query Adaptive Matching for Instance Retrieval Using Convolutional Feature Maps (arXiv 1606.6811)

This last article is currently posted on arXiv and is from the work of Jiewei Cao and others at the University of Queensland in Australia. As mentioned at the beginning of this article, cluttered backgrounds have a great impact on instance search. To overcome this problem, based on the sum-pooling method proposed in the previous article, this article proposes a method called query adaptive matching (QAM) to calculate the similarity between images. The core of the method is to perform pooling operations on multiple areas of the image and create multiple features to express the image. Then at the time of matching, the query image will be compared with the features of these regions respectively, and the best match score will be taken as the similarity between the two images. The next question is how to build these areas.

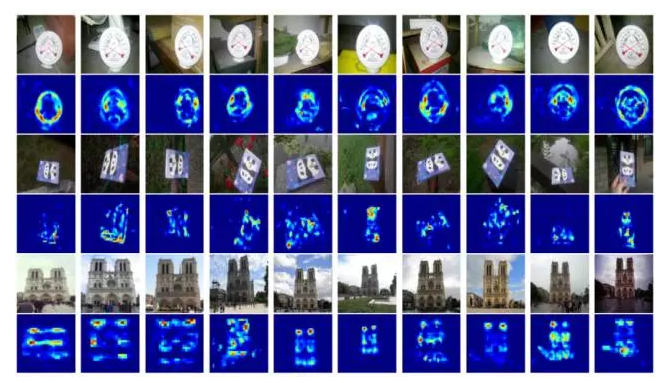

The author first proposed two methods—Feature Map Pooling and Overlapped Spatial Pyramid Pooling (OSPP) to get the base region of the image. Then, by consolidating these base regions continuously to find the best similarity score, the target area is constructed. The most appealing aspect is that the author converts the entire merger process into a solution to an optimization problem. The following figure shows the partial results of the QAM method and the feature map of the corresponding image.

Summary: In some instances of search tasks, due to the lack of sufficient training samples, you cannot directly “end-to-end†to learn image features. At this time, how to code off-the-shelf CNN features as image representation suitable for instance search has become a hot research direction in this field.

The First Alibaba 2015 Large-scale Image Search Contest

After introducing some of the major deep instance search methods in recent years, in the following sections, this article will summarize some relevant practices that appear in the Alibaba’s large-scale image search contest to introduce some practices that can improve visual search performance. Tips and methods.

Alibaba's large-scale image search contest was hosted by Ali's image search group and asked the teams to find out from the vast library of pictures the pictures that contain the same objects as the query pictures. This competition provides the following two types of data for training: training set of about 200W pictures (category-level tags and corresponding attributes), 1417 verification query pictures and corresponding search results (about 10W in total). In the test, given 3567 query images, the participating teams need to search for those images that meet the requirements from the evaluation set of about 300W images (no label), and the evaluation index is a top AP-based mAP (mean Average Precision).

First of all, we briefly introduce our method - Multi-level Image Representation for Instance Retrieval. This method achieved the third place in this competition. Many methods use the characteristics of the last convolutional layer or full-connection layer to perform the search. However, due to the high-level features that have lost a lot of detailed information (for deeper networks, the loss is more serious), the instance search is not very accurate, as follows The figure shows that the overall profile is similar, but the details are very different.

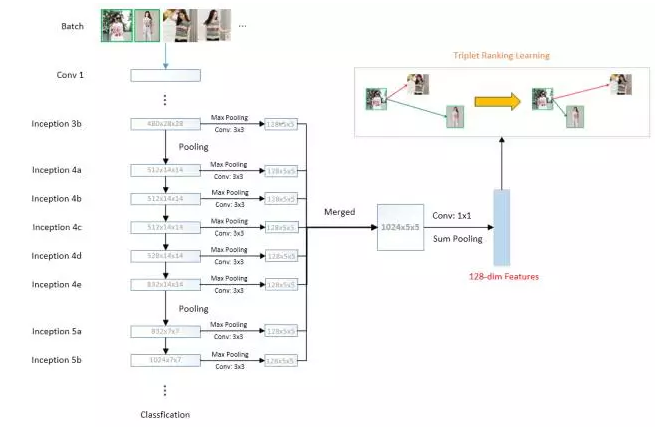

To overcome this problem, we fuse the feature maps of different layers in the CNN network. This not only uses the semantic information of the high-level features, but also considers the detailed texture information of the low-level features, making the instance search more accurate. As shown in the figure below, our experiment is mainly based on the GoogLeNet-22 network. For the final 8-layer feature map (from Inception 3b to Inception 5b), we first sub-sampled these different scale feature maps using maximum pooling (conversion to Same-size feature maps, and the convolution used is further processed for these sampled results. These feature maps are then linearly weighted (by the convolution), and on this basis, the final image features are obtained using sum pooling. During training, we learned these features end-to-end by optimizing the triplet ranking loss based on the cosine distance based on the training data provided. Therefore, when testing, the cosine distance between features can be directly used to measure the similarity of the image.

In addition, using the idea of ​​hard-to-separate sample mining to learn from training SVM classifiers, our method first calculates the loss of all potential triplets in the current training batch (in the case of forward calculations). A picture of the same category and a picture of a different category constitute a potential triad), then find those "difficult" triples (greater losses), and finally use these "difficulty" in the reverse calculation The tuple carries out error propagation to obtain better training results.

Then briefly summarize the relevant methods of other teams. In the end-to-end feature learning method, in addition to the triplet ranking loss, the contrast loss (corresponding to the Siamese network) is also a common loss function. In addition, there are some ways we can focus on, which can significantly improve search performance:

(I) Mining with the same figure

In supervised machine learning methods, more data may mean higher accuracy. Therefore, the team from the Institute of Computing Technology of the Chinese Academy of Sciences proposes to cluster on the class-level training set according to the characteristics of the ImageNet pre-training model, and then use the threshold to mine more of the same chart, and finally use these same chart to train. CNN network, learning image features. This method is simple to implement and can significantly improve the performance of the search.

(b) Target detection

In case retrieval, complex background noise directly affects the final search performance. So many teams first try to use target detection (such as faster-rcnn) to locate the area of ​​interest, and then learn the features further and compare similarities. In addition, weak supervision of target positioning is also an effective method when there is no bounding box training data.

(iii) Fusion of first-order pooling features and second-order pooling features

Second-order pooling methods can often achieve better search accuracy by capturing second-order statistical variables, such as covariance. The team led by Professor Li Peihua from Dalian Polytechnic University merged the first-order pooling characteristics with the second-order pooling features on the basis of the CNN network and achieved very good results.

(iv) Joint feature learning and attribute prediction

This method is similar to the DeepFashion mentioned in the third section of this article. It also learns the characteristics of the feature and the predicted picture (multitasking training), resulting in more distinguishing features.

Lei Fengwang Note: This article was authorized by the Lecture Center of Deep Learning to be published by Lei Feng. Reproduced please indicate the author and source, and must not delete the content.

Jinhu Weibao Trading Co., Ltd , https://www.weibaoe-cigarette.com