Foreword

If the network were ideal, with no packet loss, no jitter, and low latency, each complete frame could be played as soon as it arrived, and the result would be very good. Real networks are often more complicated, especially wireless ones. If frames are played directly, even a slight degradation of the network makes the video stall and produces artifacts such as mosaics. The receiver therefore has to buffer incoming data, and buffering necessarily adds delay: the greater the delay, the better jitter is filtered out. A good video jitterbuffer must not only handle packet loss, reordering, and late arrival, but also keep playback smooth, avoiding noticeably accelerated or slowed playback as much as possible.

Mainstream real-time audio/video frameworks, such as WebRTC and doubango, generally implement a jitterbuffer, and WebRTC's is quite good. By function it can be divided into two parts: jitter and buffer. The buffer mainly handles packet loss, reordering, and late arrival, and works together with QoS mechanisms such as NACK, FEC, and FIR. The jitter part estimates the jitter delay from the size and delay of the current frame, then combines it with the decode delay, the render delay, and the audio/video synchronization delay to obtain the render time, which is used to render video frames smoothly. The two parts are introduced separately below.

Buffer

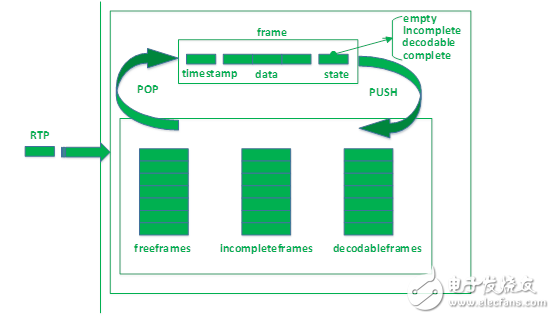

Buffer running mechanism diagram

The main operations the buffer performs on each received RTP packet are as follows:

When the first video packet arrives, an empty frame block is popped from the freeframes queue to hold it.

After that, for each RTP packet received, the buffer searches incompleteframes and decodableframes by timestamp to see whether a packet with the same timestamp (that is, belonging to the same frame) has already been received. If one is found, that frame block is popped; otherwise, an empty frame is popped from freeframes.

The packet's sequence number determines where it is inserted within the frame, and the frame's state is then updated. The possible states are empty, incomplete, decodable, and complete: empty means the frame holds no data, incomplete means at least one packet has arrived, decodable means the frame can be decoded, and complete means every packet of the frame has arrived. Whether a frame counts as decodable depends on decode_error_mode. Different QoS strategies set different values of decode_error_mode: kNoErrors, kSelectiveErrors, and kWithErrors. decode_error_mode determines whether a frame the decoding thread fetches from the buffer may contain errors, that is, whether the current frame may have lost packets.
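The state rules in the step above can be illustrated with a small classifier. This is a minimal Python sketch, not WebRTC's code: the names `FrameState`, `DecodeErrorMode`, and `frame_state` are hypothetical, sequence numbers are assumed not to wrap, the kWithErrors rule (any frame with data is decodable) is a simplification, and the kSelectiveErrors rule (first packet present and at least `min_ratio` of the packets received) is an invented stand-in for WebRTC's more involved heuristic.

```python
from enum import Enum


class FrameState(Enum):
    EMPTY = "empty"            # no packets yet
    INCOMPLETE = "incomplete"  # at least one packet
    DECODABLE = "decodable"    # decodable under the current error mode
    COMPLETE = "complete"      # every packet of the frame has arrived


class DecodeErrorMode(Enum):
    NO_ERRORS = "kNoErrors"
    SELECTIVE_ERRORS = "kSelectiveErrors"
    WITH_ERRORS = "kWithErrors"


def frame_state(received, first_seq, last_seq, mode, min_ratio=0.8):
    """Classify a frame from the set of sequence numbers received so far.

    first_seq/last_seq are the frame's first and last packet sequence
    numbers, or None while unknown. Simplification: no 16-bit wraparound.
    """
    if not received:
        return FrameState.EMPTY
    bounds_known = first_seq is not None and last_seq is not None
    if bounds_known:
        expected = last_seq - first_seq + 1
        have = len({s for s in received if first_seq <= s <= last_seq})
        if have == expected:
            return FrameState.COMPLETE
    if mode is DecodeErrorMode.WITH_ERRORS:
        # Assumed rule: any frame with data may go to the decoder.
        return FrameState.DECODABLE
    if (mode is DecodeErrorMode.SELECTIVE_ERRORS and bounds_known
            and first_seq in received and have / expected >= min_ratio):
        # Invented stand-in for WebRTC's selective-errors heuristic.
        return FrameState.DECODABLE
    # kNoErrors (or a frame failing the selective rule) waits for completion.
    return FrameState.INCOMPLETE
```

In this sketch, kNoErrors only ever releases complete frames, while kWithErrors releases anything with data; kSelectiveErrors sits in between.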

The frame is then pushed back to a queue according to its state: an incomplete frame goes to the incompleteframes queue, and a decodable or complete frame goes to the decodableframes queue.
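The lookup and put-back cycle described in the steps above might look like the following minimal Python sketch. `Frame`, `FrameQueues`, `get_frame`, `put_back`, and `insert_packet` are hypothetical names; the queues are modeled as dictionaries keyed by RTP timestamp, and the caller is assumed to decide whether the frame is complete.

```python
from collections import deque


class Frame:
    def __init__(self):
        self.timestamp = None
        self.packets = {}      # sequence number -> payload
        self.state = "empty"


class FrameQueues:
    def __init__(self, initial_free=6):
        self.free_frames = deque(Frame() for _ in range(initial_free))
        self.incomplete_frames = {}   # timestamp -> Frame
        self.decodable_frames = {}    # timestamp -> Frame (decodable or complete)

    def get_frame(self, timestamp):
        """Pop the frame with this timestamp, else an empty frame from freeframes."""
        for queue in (self.incomplete_frames, self.decodable_frames):
            frame = queue.pop(timestamp, None)
            if frame is not None:
                return frame
        if not self.free_frames:
            return None  # pool exhausted; growing it is handled elsewhere
        frame = self.free_frames.popleft()
        frame.timestamp = timestamp
        return frame

    def put_back(self, frame):
        """Push the frame to the queue that matches its state."""
        if frame.state == "incomplete":
            self.incomplete_frames[frame.timestamp] = frame
        elif frame.state in ("decodable", "complete"):
            self.decodable_frames[frame.timestamp] = frame
        else:
            raise ValueError(f"unexpected state {frame.state!r}")

    def insert_packet(self, timestamp, seq, payload, complete):
        frame = self.get_frame(timestamp)
        if frame is None:
            return False
        frame.packets[seq] = payload
        frame.state = "complete" if complete else "incomplete"
        self.put_back(frame)
        return True
```

Popping the frame before modifying it and pushing it back afterwards keeps each frame in exactly one queue at a time in this sketch.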

The freeframes queue has an initial size. When it runs empty, its size is increased, up to a maximum. Outdated frames are periodically removed from the incompleteframes and decodableframes queues and pushed back to freeframes.
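Growing freeframes up to a cap and recycling outdated frames can be sketched as follows. This is a minimal Python illustration under stated assumptions: `FramePool`, `acquire`, `release`, and `recycle_outdated` are hypothetical names, the pool grows one frame at a time, the start and maximum sizes are illustrative, and a frame counts as outdated when its timestamp is older than the last decoded timestamp (timestamp wraparound is ignored).

```python
from collections import deque


class Frame:
    def __init__(self):
        self.timestamp = None


class FramePool:
    def __init__(self, start_size=6, max_size=300):
        self.max_size = max_size
        self.allocated = start_size
        self.free_frames = deque(Frame() for _ in range(start_size))

    def acquire(self, timestamp):
        """Take a free frame, growing the pool by one if empty and below the cap."""
        if not self.free_frames:
            if self.allocated >= self.max_size:
                return None  # at the maximum: caller must recycle or flush frames
            self.free_frames.append(Frame())
            self.allocated += 1
        frame = self.free_frames.popleft()
        frame.timestamp = timestamp
        return frame

    def release(self, frame):
        """Return a frame (e.g. after decoding) to the free pool."""
        frame.timestamp = None
        self.free_frames.append(frame)

    def recycle_outdated(self, queue, last_decoded_ts):
        """Move frames older than the last decoded frame from `queue` to the pool."""
        stale = [ts for ts in queue if ts < last_decoded_ts]
        for ts in stale:
            self.release(queue.pop(ts))
        return len(stale)
```

`release` also covers the decoding thread's step of returning a decoded frame to freeframes.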

The decoding thread fetches a frame and, once decoding is complete, pushes it back to the freeframes queue.

The jitterbuffer is closely tied to QoS policy. For example, after some frames are cleared from the incompleteframes and decodableframes queues, a FIR (Full Intra Request, i.e., a keyframe request) is needed; and when packet loss is detected from gaps in the packet sequence numbers, a NACK (negative acknowledgement, requesting retransmission) is sent.

Jitter

What exactly is jitter? Packets traveling from the source address to the destination address experience different delays, and the variation in that delay is jitter. What effect does jitter have? It makes audio and video playback unsteady: audio wobbles, and video alternately speeds up and slows down. How do we counter jitter? By adding delay. The delay added to absorb jitter is the jitterdelay.
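A toy calculation makes the point that added delay absorbs jitter. The Python sketch below assumes, purely for illustration, that sender and receiver clocks are synchronized so one-way delays can be measured directly, and schedules each frame at its send time plus a fixed playout delay; the function names and sample numbers are hypothetical.

```python
def one_way_delays(send_ms, arrival_ms):
    return [a - s for s, a in zip(send_ms, arrival_ms)]


def delay_spread_ms(send_ms, arrival_ms):
    """Largest minus smallest one-way delay: a crude measure of jitter."""
    delays = one_way_delays(send_ms, arrival_ms)
    return max(delays) - min(delays)


def late_frames(send_ms, arrival_ms, playout_delay_ms):
    """Indices of frames that arrive after send time + playout delay."""
    return [i for i, (s, a) in enumerate(zip(send_ms, arrival_ms))
            if a > s + playout_delay_ms]


# Frames sent every 33 ms; the network delay varies between 50 and 120 ms.
send = [0, 33, 66, 99, 132]
arrival = [50, 113, 121, 219, 192]

print(delay_spread_ms(send, arrival))   # 70
print(late_frames(send, arrival, 60))   # [1, 3]
print(late_frames(send, arrival, 120))  # []
```

A playout delay at least as large as the worst observed delay leaves no late frames, at the cost of 120 ms of latency here; the jitterdelay manages this trade-off adaptively instead of with a fixed value.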

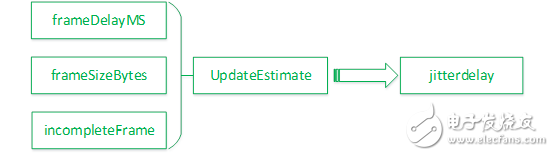

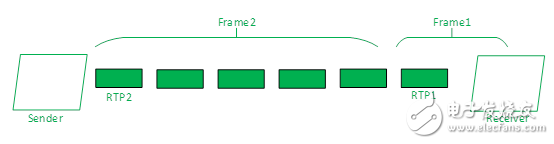

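Update the jitterdelay graph

In the jitterdelay update graph (the first of the two figures above), frameDelayMS is the inter-frame delay: the combined delay that one frame of data incurs from packetization and network transmission, relative to the previous frame. Specifically, as the second figure above shows, it is the difference between the times at which RTP1 and RTP2 reach the receiver, minus the difference between their RTP timestamps converted to milliseconds.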
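The frameDelayMS computation can be written out directly. This minimal Python sketch assumes the 90 kHz RTP clock used for video and handles 32-bit timestamp wraparound with modular arithmetic; the function name `inter_frame_delay_ms` is hypothetical, and a positive result means the second frame arrived later than its timestamp spacing predicts.

```python
VIDEO_RTP_CLOCK_HZ = 90_000  # RTP clock rate for video payloads


def inter_frame_delay_ms(prev_arrival_ms, prev_rtp_ts, arrival_ms, rtp_ts):
    """Arrival-time gap minus the send-time gap implied by RTP timestamps."""
    ts_diff = (rtp_ts - prev_rtp_ts) % (1 << 32)  # unsigned 32-bit difference
    if ts_diff >= 1 << 31:
        ts_diff -= 1 << 32  # interpret as a step backwards (reordered frame)
    send_gap_ms = ts_diff * 1000 / VIDEO_RTP_CLOCK_HZ
    return (arrival_ms - prev_arrival_ms) - send_gap_ms
```

For example, two frames whose timestamps are 2970 ticks (33 ms) apart but which arrive 40 ms apart give a frame delay of 7 ms.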

The second figure above is the interframe delay graph. In the jitterdelay update graph, frameSizeBytes is the size of the current frame, incompleteFrame indicates whether the frame is incomplete, and UpdateEstimate is the module that updates the jitterdelay from these three parameters. UpdateEstimate is the core module: it applies a Kalman filter to the inter-frame delay. The jitter delay is

$$\text{JitterDelay} = \theta_0 \,(\text{MaxFS} - \text{AvgFS}) + \left(\text{noiseStdDevs} \cdot \sqrt{\text{varNoise}} - \text{noiseStdDevOffset}\right)$$

where $\theta_0$ (theta[0]) is the reciprocal of the channel transmission rate, MaxFS is the largest frame size received since the session started, AvgFS is the average frame size, noiseStdDevs is a noise coefficient equal to 2.33, varNoise is the noise variance, and noiseStdDevOffset is a noise offset constant equal to 30. UpdateEstimate continuously updates varNoise and the other parameters. Once the jitterdelay is known, adding the decode delay and the render delay gives the total delay, which must be no smaller than the delay required for audio/video synchronization. Adding this total delay to the current system time gives the render time, which controls when the frame is played. Controlling playback in turn indirectly controls the size of the buffer.
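A compact model of UpdateEstimate and the formula above follows. It is a simplified Python sketch, not WebRTC's estimator: it assumes the measurement model frameDelay = θ0·ΔFS + θ1 + noise, where ΔFS is the size difference between consecutive frames and θ1 is a size-independent offset; it runs a textbook two-state Kalman filter on θ, tracks varNoise as an exponential average of squared residuals, and uses a plain running mean for AvgFS. The class and function names, the process-noise values, the initial covariance, and the 1 ms floor on the noise term are all assumptions of this sketch.

```python
import math

NOISE_STD_DEVS = 2.33            # noiseStdDevs from the text
NOISE_STD_DEV_OFFSET_MS = 30.0   # noiseStdDevOffset from the text


def jitter_delay_ms(theta0, max_fs, avg_fs, var_noise):
    """theta0*(MaxFS - AvgFS) + noise term (floored at 1 ms in this sketch)."""
    noise = NOISE_STD_DEVS * math.sqrt(var_noise) - NOISE_STD_DEV_OFFSET_MS
    return theta0 * (max_fs - avg_fs) + max(noise, 1.0)


class JitterEstimatorSketch:
    """Kalman filter for frame_delay = theta0 * dFS + theta1 + noise."""

    def __init__(self, alpha=0.99, q=(1e-10, 1e-2)):
        self.theta = [0.0, 0.0]               # [ms per byte, ms offset]
        self.p = [[1e-2, 0.0], [0.0, 1e2]]    # estimate covariance (assumed start)
        self.q = q                            # diagonal process noise (assumed)
        self.alpha = alpha                    # smoothing factor for var_noise
        self.var_noise = 4.0                  # ms^2 (assumed start)
        self.prev_size = None
        self.avg_fs = 0.0
        self.max_fs = 0.0
        self.count = 0

    def update(self, frame_delay_ms, frame_size_bytes):
        self.count += 1
        self.avg_fs += (frame_size_bytes - self.avg_fs) / self.count
        self.max_fs = max(self.max_fs, frame_size_bytes)
        if self.prev_size is None:  # need two frames to form dFS
            self.prev_size = frame_size_bytes
            return
        h = (frame_size_bytes - self.prev_size, 1.0)
        self.prev_size = frame_size_bytes
        p = self.p
        p[0][0] += self.q[0]  # predict: theta is constant plus process noise
        p[1][1] += self.q[1]
        residual = frame_delay_ms - (self.theta[0] * h[0] + self.theta[1])
        ph = (p[0][0] * h[0] + p[0][1] * h[1], p[1][0] * h[0] + p[1][1] * h[1])
        s = h[0] * ph[0] + h[1] * ph[1] + max(self.var_noise, 1.0)
        k = (ph[0] / s, ph[1] / s)            # Kalman gain
        self.theta[0] += k[0] * residual
        self.theta[1] += k[1] * residual
        # Covariance update P = (I - K h) P, using the symmetry of P.
        self.p = [[p[i][j] - k[i] * ph[j] for j in range(2)] for i in range(2)]
        self.var_noise = self.alpha * self.var_noise + (1 - self.alpha) * residual ** 2

    def estimate_ms(self):
        return jitter_delay_ms(self.theta[0], self.max_fs, self.avg_fs, self.var_noise)
```

With θ0 = 0.01 ms/byte, MaxFS − AvgFS = 2000 bytes, and varNoise = 400 ms², the formula gives 20 + (2.33 × 20 − 30) = 36.6 ms.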

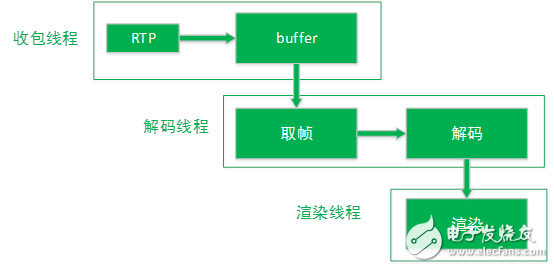

Fetching, decoding, and playing frames

The figure above shows the frame fetching, decoding, and playback flow. This article covers only jitterbuffer-related content, so only the frame-fetching step is described here. The decoding thread continually looks for the expected data in the buffer, which may be required to be complete or allowed to be incomplete. If complete data is expected, a frame whose state is complete is taken from the decodableframes queue; if incomplete data is acceptable, frames are fetched from both the decodableframes and incompleteframes queues. Before fetching a frame, the thread first finds its timestamp, then computes the jitterdelay and the render time, and waits for a period (the render time minus the current time, the decode delay, and the render delay) before taking the data and passing it on for decoding and rendering. When a complete frame is required there is a maximum wait time: if the buffer currently holds no complete frame, the thread can wait up to that long in the hope that a complete frame arrives in the meantime.

Postscript

From the principles above, the receive buffer in WebRTC is not fixed in size but changes with factors such as network fluctuation. The jitter component is designed to counter jitter caused by network fluctuation so that video plays smoothly. Is there still room to optimize the jitterbuffer? It is already excellent, but we can tune it to improve video quality. For example, we can enlarge the buffer; because the jitterbuffer is dynamic, directly increasing the size of freeframes has no effect, and the buffer can only be enlarged by adjusting the delay. As another example, we can lengthen the wait time to obtain more complete frames. A third example is to cooperate with QoS strategies such as NACK, FIR, and FEC to combat packet loss. All of these come at the cost of delay. In short, we must balance delay against packet loss and jitter.
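The timing side of frame fetching can be sketched as follows. This is a minimal Python illustration, not WebRTC's API: `FetchTiming`, `CompleteFrameWaiter`, and their methods are hypothetical; the total delay is taken as jitterdelay + decode delay + render delay, raised if necessary to an assumed A/V-sync minimum; the render time is that delay added to the current time; and the maximum wait for a complete frame is implemented with `threading.Condition.wait_for` and a timeout.

```python
import threading
from dataclasses import dataclass


@dataclass
class FetchTiming:
    jitter_delay_ms: float
    decode_delay_ms: float
    render_delay_ms: float
    min_sync_delay_ms: float = 0.0  # hypothetical delay requested by A/V sync

    def total_delay_ms(self):
        return max(self.jitter_delay_ms + self.decode_delay_ms + self.render_delay_ms,
                   self.min_sync_delay_ms)

    def render_time_ms(self, now_ms):
        return now_ms + self.total_delay_ms()

    def wait_before_decode_ms(self, render_time_ms, now_ms):
        """Render time minus now, decode delay, and render delay (never negative)."""
        return max(0.0, render_time_ms - now_ms
                   - self.decode_delay_ms - self.render_delay_ms)


class CompleteFrameWaiter:
    """Waits up to a maximum time for a complete frame to become available."""

    def __init__(self):
        self._cond = threading.Condition()
        self._complete = []

    def push_complete(self, frame):
        with self._cond:
            self._complete.append(frame)
            self._cond.notify()

    def next_complete(self, max_wait_ms):
        with self._cond:
            if self._cond.wait_for(lambda: self._complete, timeout=max_wait_ms / 1000):
                return self._complete.pop(0)
            return None  # timed out: caller may fall back to an incomplete frame
```

Waiting until the render time minus the decode and render delays hands a frame to the decoder just early enough for it to be decoded and rendered on time; a longer maximum wait yields more complete frames at the cost of extra delay.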
