The iPhone X's face recognition technology has set off a wave of enthusiasm for "face scanning". But while we enjoy the novelty of unlocking a phone with our face, have we stopped to ask whether face recognition is really safe for us, and whether our data is properly protected?

What data security questions does iPhone X face recognition raise?

At this year's Apple event, the iPhone X's "notch" became the center of attention, and new features such as face recognition, dual cameras, and augmented reality became its biggest selling points. The iPhone X claims to "define the future of the smartphone" for the next 10 years, overshadowing the iPhone 8 and iPhone 8 Plus and paying tribute to smartphone pioneer Steve Jobs.

Face ID, the face recognition function, is undoubtedly the iPhone X's killer feature. Nine aspects of Face ID were introduced at the event: face verification, the TrueDepth camera, simple enrollment, a dedicated neural network, secure and natural use, user privacy, attention awareness, adaptivity, and integration with Apple Pay and other applications. Cook emphasized the security of Face ID: where the chance of a random person unlocking Touch ID is about 1 in 50,000, the probability of Face ID being opened by someone else may be only one in a million.

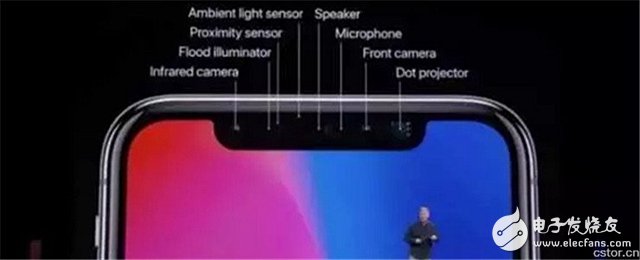

This is thanks to what is hidden inside the "notch": 4 of its 8 components take part in face recognition (the infrared camera, flood illuminator, ambient light sensor, and dot projector). Using 30,000 sampling points as a reference, these front-facing components capture and process the face image and build 3D data of the user's face. At each unlock, the newly captured data is compared with the previously enrolled data, with the processing handled by the Neural Engine, the neural network module in the A11 chip.
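The comparison step described above can be illustrated with a toy example. This is only a sketch under stated assumptions, not Apple's method: the article does not say how Face ID compares captures, so cosine similarity, the threshold of 0.95, the four-element vectors, and the function names `cosine_similarity` and `matches` are all hypothetical stand-ins.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector carries no information to match on
    return dot / (norm_a * norm_b)


def matches(enrolled, capture, threshold=0.95):
    """Accept the capture only if it is close enough to the enrolled template.

    The threshold is a hypothetical value chosen for this illustration.
    """
    return cosine_similarity(enrolled, capture) >= threshold


# Hypothetical feature vectors; a real system would use far more values.
enrolled = [0.12, 0.80, 0.35, 0.50]    # stored at enrollment
same_user = [0.11, 0.82, 0.33, 0.52]   # fresh capture, same face
other_user = [0.90, 0.10, 0.70, 0.05]  # fresh capture, different face

print(matches(enrolled, same_user))
print(matches(enrolled, other_user))
```

The threshold is where the security trade-off lives: raising it makes a false match by another person less likely, but rejects the owner more often.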

Face ID has burst into public view, drawing attention to the new generation of iPhone and generating plenty of discussion. At the same time, it has once again raised concerns about personal privacy and information security. Netizens were quick to joke about it.

An online marketplace also followed the trend, listing a gadget billed as an "anti-face-recognition unlock" device.

The netizens' jokes also reveal concerns about the information security risks behind unlocking phones with face recognition. Al Franken, a Democratic senator from Minnesota, said that Apple might use the new unlocking system, Face ID, to collect facial data from users, and that the data might then leak. Just one day after the release of the iPhone X, Franken asked Apple to disclose in detail the privacy and security measures it takes when using biometric data.

On the eve of the Apple event, Stanford University assistant professor Michal Kosinski and his student Wang Yilun published a paper titled "Deep neural networks are more accurate than humans at detecting sexual orientation from facial images". Using the VGG-Face deep neural network model, they extracted and quantified facial features such as face shape, mouth, nose, eyebrows, and facial hair, then trained a classifier to distinguish between sexual orientations. The news caused a public uproar. Gay people still face discrimination and prejudice today, and critics questioned whether this research seriously violates personal privacy. The reach of technology is moving beyond the public domain into personal space, testing the limits of what society and the law will tolerate.

We used the Qingbo big data public opinion monitoring system, with "iPhone X" and "privacy" as the monitoring keywords, for data analysis. The results show that among the sentiments expressed by netizens, negative sentiment accounted for 12.43% and neutral sentiment for 29.5%. A considerable share of people are not optimistic about the potential personal information security risks of the iPhone X.

Face recognition is not limited to the iPhone X

The iPhone X is not the only device using face recognition. Before it was introduced, Samsung's Note 8 and Xiaomi's Note 3 already supported face unlock. However, their face capture remained at the 2D camera stage, there were embarrassing cases of phones being unlocked with the owner's photo, and face recognition drew poor reviews as a result.

Face recognition is not exclusive to smartphones. In access control systems, camera surveillance systems, payment systems, and other devices, face recognition works like a central brain screening its inputs, and it has plenty of room to grow. We are entering the era of "face scanning". As society becomes more information-driven and digital, face recognition has worked its way into everyday scenarios such as clothing, food, housing, and transportation, and is rapidly gaining ground in the market.

On August 25, Wuhan Railway Station fully rolled out face-scan entry; on September 1, Alipay announced commercial face-scan payment; on September 5, a large number of hotels in Hangzhou began allowing check-in by face scan; on September 6, HSBC announced that it would use face recognition technology; on September 7, Jingdong and Suning launched face-scan payment; on September 11, Beijing Normal University announced that its student dormitories had fully switched to "opening the door by face scan". More and more application scenarios are introducing face recognition technology.

Face recognition selling out personal information

Cook claims that Face ID is 20 times more secure than Touch ID. The basis for this is the hardware: the iPhone X uses an infrared structured-light camera system with a 3D structured-light depth lens. The phone actively projects a specific infrared structured-light pattern onto the subject and uses it to acquire 3D image data of the face. Because it relies on depth, 3D face unlock with structured light resists flat-image attacks such as photos. In addition, Apple reportedly does not collect users' 3D face data in its cloud, which also improves security.
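The "20 times" figure can be checked with simple arithmetic. The sketch below assumes Apple's stated false-match rates of roughly 1 in 50,000 for Touch ID and 1 in 1,000,000 for Face ID; the function `chance_of_false_match` and the assumption that each attempt is an independent random try are ours, added only for illustration.

```python
from fractions import Fraction

# Apple's stated odds that a random person can unlock the device.
touch_id_rate = Fraction(1, 50_000)
face_id_rate = Fraction(1, 1_000_000)

# How many times less likely a false match is with Face ID.
ratio = touch_id_rate / face_id_rate
print(ratio)  # 20


def chance_of_false_match(rate, attempts):
    """Probability of at least one false match in `attempts` tries.

    Assumes every attempt is an independent random try, which is a
    simplification made for this illustration.
    """
    return float(1 - (1 - rate) ** attempts)


# Compare both systems over the same hypothetical number of attempts.
for attempts in (1, 5, 100):
    print(attempts,
          chance_of_false_match(touch_id_rate, attempts),
          chance_of_false_match(face_id_rate, attempts))
```

These rates describe random strangers only. They say nothing about targeted attacks or about what happens to the data once collected, which is the concern the rest of this article turns to.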

Despite this, the data shows that the state of global information security is not encouraging. According to the Breach Level Index 2016 report, there were more than 900 data breaches worldwide in the first half of 2016 alone. By type of breach, identity theft, financial theft, and account access accounted for 64%, 16%, and 11%, respectively; by source, theft by outsiders, accidental loss, and theft by insiders accounted for 69%, 18%, and 9%, respectively. The number of breaches, the variety of breach types, and the large share attributable to outside theft all expose the current risks to data security.

Turning to China, the Xu Yuyu case became national news in 2016 and a textbook example of telecom fraud. According to data from the Ministry of Public Security, telecom fraud cases have grown by 20% to 30% per year over the past 10 years; from January to July 2016 alone, there were 355,000 cases of telecom fraud in China, up 36.4% year-on-year. Receiving sales calls and "you have won a prize" calls every day has become commonplace, and no one can be sure they will not be the next victim. Concerns about information security are therefore not groundless.

The same applies to the "smart" advertising now being rolled out across platforms: Internet companies turn the private information users leave in applications such as WeChat into data assets. Using intelligent tools, companies analyze users' age, interests, personality, spending power, and so on, and then customize and personalize services for them. For example, different users will see advertisements for different products, such as "BMW" or "Coke", in their circle of friends. The emergence of such smart advertising has triggered discussions about consumer discrimination and the appropriation of personal information.

Finding common ground between technology development and data security

In fact, concern about information security, and efforts to govern it, appeared long ago. In 1983, Germany established the right to self-determination over personal data, and in 2008 expanded this right to protect the privacy and integrity of personal data and the individual's discretion over its disclosure and use. In 2014, Viktor Mayer-Schönberger, author of Big Data, explicitly proposed the concept of a "digital right to be forgotten", meaning the right of data subjects to require data controllers to delete their personal information to prevent its further dissemination.

Beyond the deepening of concepts and theories related to information security, exploration has also continued at several levels: the legal system, judicial practice, and technology.

At the level of the legal system, in 2016 the European Union adopted the General Data Protection Regulation (GDPR), which takes effect in 2018. Article 17, the "right to be forgotten", specifies that when personal data is no longer relevant to the purpose for which it was collected and processed, when the data subject no longer wants the data processed, or when the data controller has no valid reason to keep it, the data subject may at any time ask the company or individual that collected the data to delete it. The regulation broadens the definition of personal information and strengthens data protection.

At the level of judicial practice, Internet companies have been required to take responsibility for erasing data. Google had indexed content about a Spaniard, Mario, who was forced to auction his property because of debt. In 2010, Mario asked Google Spain to remove the link and was refused, but he persisted. Google then took Mario to court, together with the data protection agency AEPD (Agencia Española de Protección de Datos, affiliated with the Spanish government), which supported him. In 2014, the European Court of Justice, invoking the provisions of the EU Charter of Fundamental Rights on respect for private life and the protection of personal data, ruled against Google. A mechanism for European citizens to request the removal of personal data was established as a result.

At the technical level, while some people debate the personal information security and social ethics issues raised by technology, others have taken action and begun researching "anti-face recognition" techniques, trying to counter technology with technology. Grigory Bakunov, technical director of the Russian company Yandex, has said that he is fed up with the feeling of being monitored. He and several hackers teamed up to develop an anti-face recognition algorithm that prevents face recognition software from successfully identifying a person: with special makeup applied according to the algorithm, the software's identification can be evaded. Isao Echizen, a professor at the National Institute of Informatics in Tokyo, Japan, has also developed what is described as the world's first anti-face recognition glasses. The 11 near-infrared lights on the glasses interfere with face recognition and prevent a camera's facial recognition program from capturing information.

It should be noted that the emphasis on data security, and the actions taken in its name, must not go so far as to become self-defeating. Data privacy is a double-edged sword: it raises our anxiety on the one hand and offers many opportunities on the other. Proper data protection can bring great value, such as reduced transaction costs and personalized services. Clearly, the key is "proper protection", which is a matter of degree.

In addition, privacy is surrounded by a number of real-world paradoxes: privacy is valued in principle but neglected in behavior; data is protected in public but leaked in private and even traded on the black market; public opinion analysis sits in tension with freedom of thought and expression; and the privacy protection expected of data mining services sits in tension with public safety.

Clearly, getting the degree of data privacy protection right is a major question that goes beyond any single discipline. Finding common ground between technological development and data security is bound to involve some struggle. More importantly, solving this public problem requires bringing experts from all sides together to promote interdisciplinary communication. Perhaps, with technology, politics, economics, law, and other fields all taking part and each contributing its strengths, a balance between technological development and data security can be found.
