Deep learning today relies mainly on big data: models are trained on large datasets and distill knowledge or patterns that computers can then apply to similar data. So, what is big data?

People often say that big data is large-scale data.

This statement is not accurate. "Large scale" refers only to the amount of data. A large amount of data does not necessarily have value that deep learning algorithms can exploit. For example, if the speed and position of the Earth relative to the Sun are recorded every second as it orbits, a large amount of data can be obtained. But such data alone offers little value to mine, because the physical laws governing the Earth's orbit around the Sun are already well understood.

So what exactly is big data? How is it generated? What kind of data is most valuable and best suited for computers to learn from?

According to Martin Hilbert's summary, what we call big data today emerged after 2000, driven by tremendous growth in three areas: information exchange, information storage, and information processing:

Information exchange: It is estimated that from 1986 to 2007, the amount of information that could be exchanged worldwide through existing information channels grew about 217-fold, and the share of that information in digital form rose from about 20% in 1986 to about 99.9% in 2007. During this explosive growth of digital information, each node participating in the exchange became able to receive and store a large amount of data in a short time.

Information storage: Global information storage capacity is doubling roughly every three years. Over the roughly two decades from 1986 to 2007, global information storage capacity grew about 120-fold, and the share of stored information in digital form rose from about 1% in 1986 to about 94% in 2007. In 1986, even with all available information carriers and storage methods, we could store only about 1% of the information exchanged around the world; by 2007 this figure had grown to about 16%. The increase in storage capacity gives us nearly unlimited room for imagination in how big data can be used.
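As a rough sanity check, the growth figures quoted above can be related to doubling times with a few lines of arithmetic. The sketch below assumes smooth exponential growth across 1986 to 2007, which is a simplification; the function names are illustrative and not taken from any source.

```python
import math

def growth_factor(years: float, doubling_time: float) -> float:
    """Overall growth after `years` if a quantity doubles every `doubling_time` years."""
    return 2 ** (years / doubling_time)

def implied_doubling_time(years: float, factor: float) -> float:
    """Doubling time implied by an overall growth `factor` over `years`."""
    return years / math.log2(factor)

# Assumption: treat 1986-2007 as a single period of steady exponential growth.
years = 2007 - 1986

# Doubling every three years over this period.
storage_factor = growth_factor(years, 3)

# Doubling times implied by the quoted 120-fold (storage) and 217-fold (exchange) growth.
storage_doubling = implied_doubling_time(years, 120)
exchange_doubling = implied_doubling_time(years, 217)

print(f"Doubling every 3 years for {years} years: about {storage_factor:.0f}x")
print(f"120x growth implies doubling every {storage_doubling:.1f} years")
print(f"217x growth implies doubling every {exchange_doubling:.1f} years")
```

Under this assumption, doubling every three years yields a 128-fold increase, close to the quoted figure of about 120-fold, while the 217-fold growth in information exchange corresponds to a somewhat shorter doubling time.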

Information processing: With such vast capabilities for acquiring and storing information, we must also be able to organize, process, and analyze it. Companies such as Google and Facebook have built flexible, powerful distributed data processing clusters as their data volumes have grown.

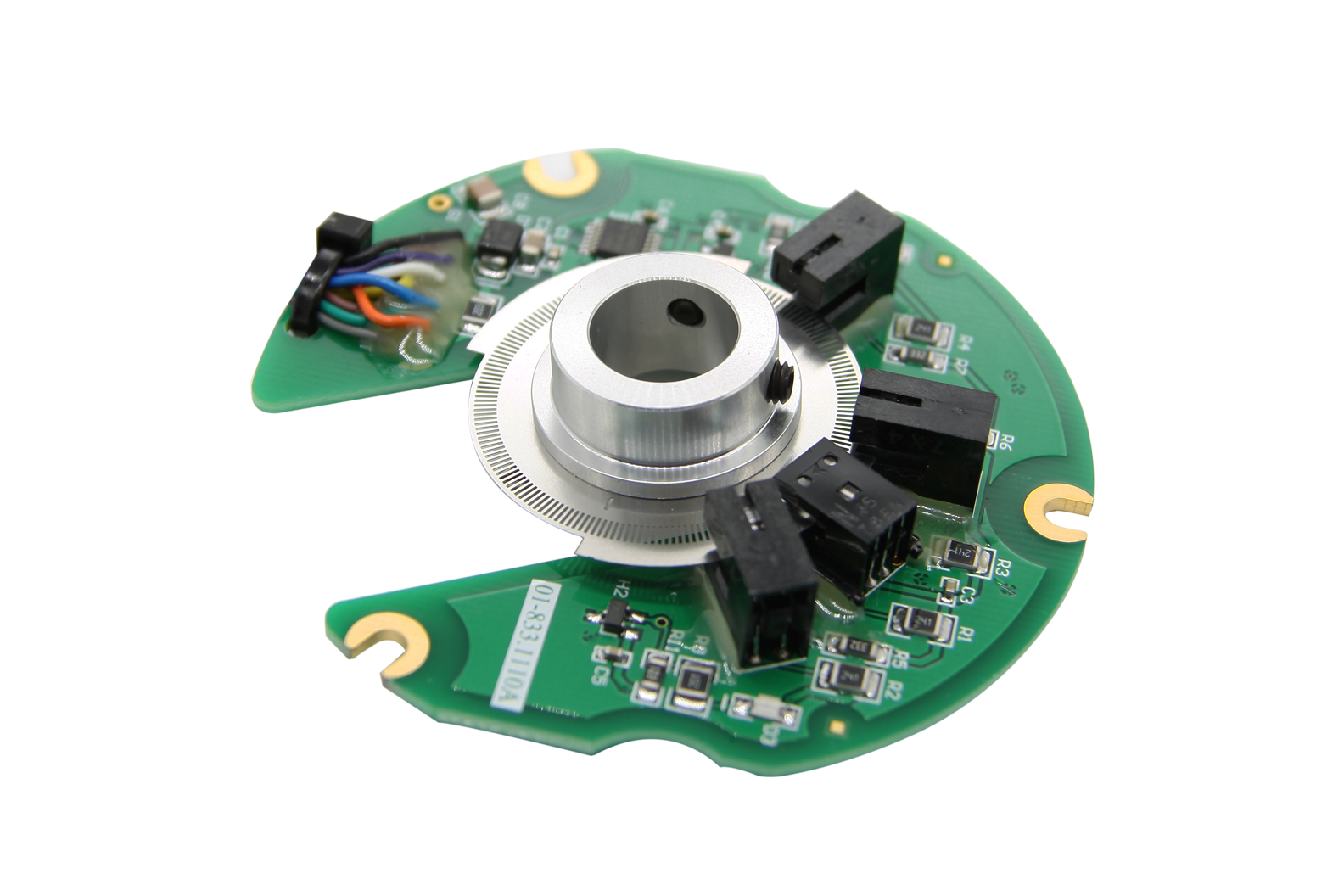
