RBF (radial basis function) network learning can be regarded as a surface-fitting (approximation) problem in a high-dimensional space. Learning means finding a surface in the multidimensional space that best fits the training data. New data are then processed (for example, classified or regressed) with the trained surface. By contrast, the back-propagation learning algorithm applies a recursive technique known in statistics as stochastic approximation.
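To make the surface-fitting view concrete, here is a minimal NumPy sketch of exact RBF interpolation. One Gaussian basis function is centred on each training point, and the weights are solved so that the fitted surface passes through every training sample. The same surface is then evaluated at new points. The toy surface, the 6×6 grid, and the width `sigma = 0.5` are illustrative assumptions, not values from the source.

```python
import numpy as np

def gaussian_design_matrix(X, centers, sigma):
    """Phi[n, k] = exp(-||x_n - c_k||^2 / sigma^2) for every sample n and center k."""
    d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-d2 / sigma ** 2)

# Training data: samples of a toy surface z = sin(x) * cos(y) on a 6 x 6 grid
g = np.linspace(-2.0, 2.0, 6)
xx, yy = np.meshgrid(g, g)
X_train = np.column_stack([xx.ravel(), yy.ravel()])      # (36, 2)
z_train = np.sin(X_train[:, 0]) * np.cos(X_train[:, 1])

# "Learning": one Gaussian per training point, weights chosen so the surface fits the data
sigma = 0.5
Phi = gaussian_design_matrix(X_train, X_train, sigma)
w = np.linalg.solve(Phi, z_train)
fit_error = np.max(np.abs(Phi @ w - z_train))           # ~0: surface passes through the data

# "Handling new data": evaluate the trained surface at unseen points (regression)
X_new = np.array([[0.5, -0.3], [1.0, 1.0]])
z_new = gaussian_design_matrix(X_new, X_train, sigma) @ w
```

Using one center per sample is the simplest possible design. Practical networks, such as those discussed below, use fewer centers than samples.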

The "basis functions" in an RBF network are provided by the hidden units of the network. When the input patterns (vectors) are expanded into the hidden space, these functions form an arbitrary "basis" for them. The functions in this set are called radial basis functions.

The difference between RBF and nonlinear regression is that RBF does not assume a mathematical model of the data, whereas nonlinear regression does. For example, the data may be known to follow a certain functional form whose coefficients are unknown.

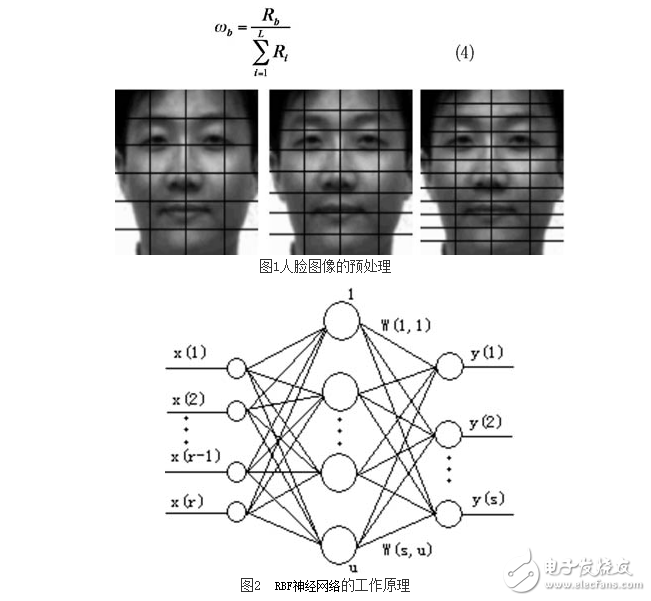

Design of a Face Recognition Method Based on an RBF Network and a Bayesian Classifier

Based on face image segmentation and singular value compression, an RBF neural network is designed and fused with a Bayesian classifier. The gray-level distribution of a face image can be described as a matrix. Its singular value features have many important properties, such as invariance to transposition, rotation, displacement, and mirroring. Algebraic features, obtained by applying various algebraic and matrix transformations to the image, serve as a representation of the face. However, the singular value vector of the whole image reflects only the statistical characteristics of the image as a whole, so it does not describe details in enough depth. This paper therefore imitates the way humans recognize faces: using image segmentation and weighting, it emphasizes the skeletal features of the face to be recognized. This resembles the human ability to disregard the changing parts of the same face when recognizing it.

The radial basis function (RBF) network is a high-performance, three-layer feedforward neural network with the global (universal) approximation property and the best-approximation property. Its training is fast and simple, and the local response of its RBF units is biologically plausible. The hidden-layer nodes of an RBF network use a nonlinear transfer function, which gives it stronger classification ability than a single-layer perceptron. Once the hidden-layer centers are determined, only the single layer of weights from the hidden layer to the output layer needs to be adjusted. As a result, the network converges faster than a multilayer perceptron, which is why the RBF neural network is chosen as the classifier.

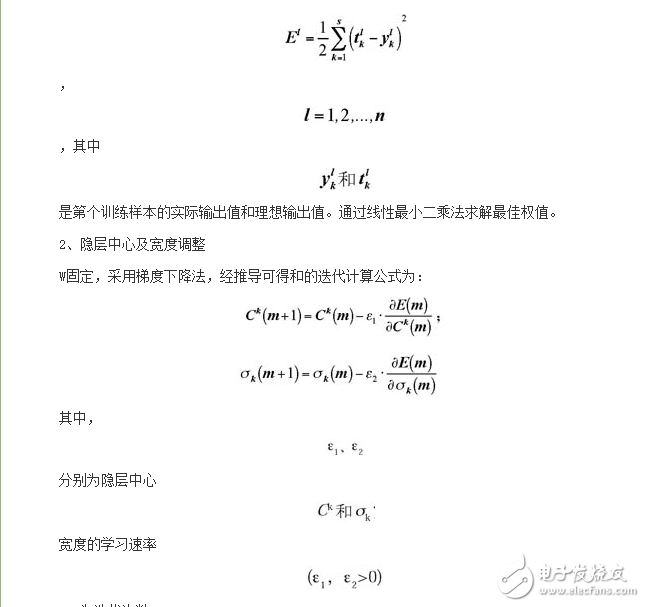

A supervised clustering algorithm is used to construct and initialize the RBF neural network. A hybrid learning algorithm (HLA) is then used for the final adjustment and training of the network parameters. With the hidden-layer parameters fixed, the connection weights between the hidden layer and the output layer are computed by linear least squares, and the centers and widths of the hidden neurons are adjusted by gradient descent. This hybrid learning algorithm lets the RBF network approach the optimal structure under the Moody criterion: in the absence of other prior knowledge, the smallest network consistent with the given samples is the best choice. This in turn gives the network better generalization ability.
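The hybrid scheme can be sketched in NumPy as follows. The sketch assumes Gaussian units $\exp(-\lVert x - c_k\rVert^2/\sigma_k^2)$ and a sum-of-squares error. Each iteration first solves for the output weights by least squares with the hidden layer fixed, then takes one gradient-descent step on the centers and widths. The function names, the step-size halving safeguard, and the toy data are assumptions of this sketch rather than details from the paper.

```python
import numpy as np

def design_matrix(X, centers, widths):
    """Gaussian hidden outputs Phi[n, k] = exp(-||x_n - c_k||^2 / sigma_k^2), plus squared distances."""
    d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-d2 / widths[None, :] ** 2), d2

def least_squares_weights(X, T, centers, widths):
    """Hidden layer fixed: hidden-to-output weights by linear least squares."""
    Phi, _ = design_matrix(X, centers, widths)
    return np.linalg.lstsq(Phi, T, rcond=None)[0]

def sse(X, T, centers, widths, W):
    """Sum-of-squares error E = 0.5 * sum (y - t)^2."""
    Phi, _ = design_matrix(X, centers, widths)
    return 0.5 * np.sum((Phi @ W - T) ** 2)

def hybrid_step(X, T, centers, widths, lr):
    """One hybrid iteration: least-squares W, then a gradient step on centers and widths.
    The step size is halved until the error actually decreases (a simple safeguard)."""
    W = least_squares_weights(X, T, centers, widths)
    Phi, d2 = design_matrix(X, centers, widths)
    residual = Phi @ W - T                                   # dE/dY
    e_old = 0.5 * np.sum(residual ** 2)
    G = (residual @ W.T) * Phi                               # dE/dPhi times Phi, shape (N, u)
    diff = X[:, None, :] - centers[None, :, :]               # x_n - c_k, shape (N, u, d)
    grad_c = 2.0 * np.einsum("nk,nkd->kd", G, diff) / widths[:, None] ** 2
    grad_s = 2.0 * np.sum(G * d2, axis=0) / widths ** 3
    while lr > 1e-12:
        new_c, new_s = centers - lr * grad_c, widths - lr * grad_s
        if np.all(new_s > 0) and sse(X, T, new_c, new_s, W) < e_old:
            return new_c, new_s, lr * 1.5                    # accept and try a larger step next time
        lr *= 0.5
    return centers, widths, lr                               # no improving step found

# Toy regression data (an illustrative assumption, not the paper's data)
rng = np.random.default_rng(1)
X = rng.uniform(-1.0, 1.0, size=(40, 2))
T = np.sin(3.0 * X[:, :1]) + X[:, 1:] ** 2                   # targets, shape (40, 1)
centers = X[rng.choice(40, size=6, replace=False)].copy()
widths = np.full(6, 0.5)

def trained_error(centers, widths):
    return sse(X, T, centers, widths, least_squares_weights(X, T, centers, widths))

initial = trained_error(centers, widths)
lr = 1e-2
for _ in range(100):
    centers, widths, lr = hybrid_step(X, T, centers, widths, lr)
final = trained_error(centers, widths)
```

Because the weights are re-solved exactly at every iteration, gradient descent only has to handle the nonlinear parameters (centers and widths). This split is the main motivation for the hybrid approach.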

A Bayesian network is a directed acyclic graph annotated with probabilities, in which each node represents a random variable. An arc between two nodes means that the corresponding random variables are probabilistically dependent. Conversely, if there is no arc, the two random variables are conditionally independent. Each node X in the network has a conditional probability table (CPT) that gives the probability of each possible value of X conditioned on the values of its parent nodes. If X has no parent node, its CPT is its prior probability distribution. Together, the structure of the network and the CPTs of all its nodes define the joint probability distribution of the variables in the network.
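The factorization defined by the structure and the CPTs can be shown on a small hypothetical network, Rain → WetGrass ← Sprinkler. The joint probability of an assignment is the product of each node's CPT entry given its parents, and the root nodes contribute their priors. The network and all probabilities below are made up for illustration.

```python
from itertools import product

# Hypothetical network: Rain -> WetGrass <- Sprinkler.
# Root nodes have no parents, so their CPT is just a prior distribution.
p_rain = {True: 0.2, False: 0.8}
p_sprinkler = {True: 0.1, False: 0.9}
# CPT of WetGrass: P(WetGrass = True | Rain, Sprinkler)
p_wet_given = {
    (True, True): 0.99,
    (True, False): 0.90,
    (False, True): 0.80,
    (False, False): 0.00,
}

def joint(rain, sprinkler, wet):
    """Joint probability = product of each node's CPT entry given its parents."""
    p_w = p_wet_given[(rain, sprinkler)]
    return p_rain[rain] * p_sprinkler[sprinkler] * (p_w if wet else 1.0 - p_w)

# The joint distribution sums to 1 over all 2^3 assignments
total = sum(joint(r, s, w) for r, s, w in product([True, False], repeat=3))
# Marginal P(WetGrass = True), obtained by summing out Rain and Sprinkler
p_wet = sum(joint(r, s, True) for r, s in product([True, False], repeat=2))
```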

Singular value decomposition (SVD)

Singular value decomposition is very useful. For an m×n matrix A, there exist U (m×m), V (n×n), and S (m×n) such that A = U*S*V'. The columns of U and V are the left and right singular vectors of A, respectively, and the diagonal entries of S are the singular values of A. The orthonormal eigenvectors of AA' form U, and its eigenvalues form the diagonal of SS'. The orthonormal eigenvectors of A'A form V, and its eigenvalues (whose non-zero values are the same as those of AA') form the diagonal of S'S. Singular value decomposition is therefore closely related to the eigenvalue problem.
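These relations can be checked quickly with NumPy on a random 5×3 matrix (an illustrative choice). `np.linalg.svd` returns U, the singular values, and V' directly. The eigenvalues of A'A and AA' can then be compared with the squared singular values.

```python
import numpy as np

rng = np.random.default_rng(0)
A = rng.standard_normal((5, 3))          # m = 5, n = 3

U, s, Vt = np.linalg.svd(A)              # full_matrices=True by default: U is 5x5, Vt is 3x3
S = np.zeros_like(A)                     # S is m x n with the singular values on its diagonal
S[:3, :3] = np.diag(s)

reconstruction_error = np.max(np.abs(A - U @ S @ Vt))   # A = U S V'

# Eigenvalues of A'A (n x n) are the squared singular values, i.e. the diagonal of S'S
eig_AtA = np.sort(np.linalg.eigvalsh(A.T @ A))[::-1]
# AA' (m x m) has the same non-zero eigenvalues plus m - n zeros (the diagonal of SS')
eig_AAt = np.sort(np.linalg.eigvalsh(A @ A.T))[::-1]
```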

The singular value decomposition reveals useful information about A. For example, the number of non-zero singular values (the rank of S) equals the rank of A. Once the rank r is known, the first r columns of U form an orthonormal basis of the column space of A.
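The rank and column-space statements can be verified numerically. This sketch builds a 4×3 matrix of rank 2 from illustrative data and counts the singular values above a small tolerance, using the same style of threshold that `np.linalg.matrix_rank` applies by default. It then checks that the first r columns of U are orthonormal and that projecting A onto them reproduces A.

```python
import numpy as np

# A rank-2 matrix built from two independent columns (illustrative data)
B = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [2.0, -1.0]])
A = B @ np.array([[1.0, 2.0, 3.0], [0.0, 1.0, 1.0]])     # 4 x 3, rank 2

U, s, Vt = np.linalg.svd(A)
tol = max(A.shape) * np.finfo(float).eps * s[0]          # threshold for "non-zero"
r = int(np.sum(s > tol))                                 # rank = number of non-zero singular values

basis = U[:, :r]                                         # first r columns of U
# Projecting A onto span(basis) leaves it unchanged, so basis spans the column space of A
projection_residual = np.max(np.abs(basis @ (basis.T @ A) - A))
```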

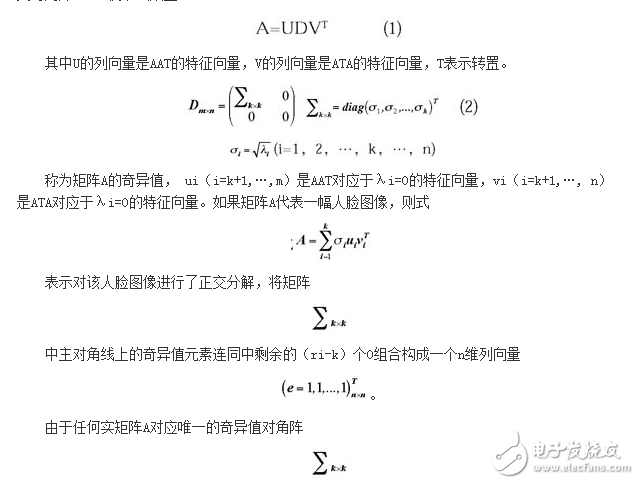

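For any matrix $A \in \mathbb{R}^{m \times n}$, singular value decomposition transforms it into a diagonal matrix.

Let $A \in \mathbb{R}^{m \times n}$ (without loss of generality, $m \ge n$) with $\operatorname{rank}(A) = k$. Then there exist orthogonal (real unitary) matrices $U \in \mathbb{R}^{m \times m}$ and $V \in \mathbb{R}^{n \times n}$ and a generalized diagonal matrix $D \in \mathbb{R}^{m \times n}$ such that

$$A = U D V^{T}, \qquad D = \begin{bmatrix} \Sigma & 0 \\ 0 & 0 \end{bmatrix}, \qquad \Sigma = \operatorname{diag}(\sigma_1, \sigma_2, \ldots, \sigma_k), \quad \sigma_1 \ge \sigma_2 \ge \cdots \ge \sigma_k > 0,$$

where $\sigma_1, \ldots, \sigma_k$ are the non-zero singular values of $A$.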

Therefore, a face image corresponds to a unique singular value feature vector.

(1) Select face images from a face database as the training set for recognition;

(2) Geometrically normalize the face images selected for the training set, and normalize their grayscale;

(3) Divide each preprocessed face image into sub-blocks of a fixed size;

(4) Convert each image into a column vector (first stack the column vectors of each sub-block into a single column, then stack all sub-blocks in order); subsequent processing is done sub-block by sub-block;
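Steps (2) to (4) and the per-block singular value features can be sketched in NumPy as below. The 64×64 image size, the 8×16 block size (which yields 32 sub-blocks), and zero-mean/unit-variance gray normalization are assumptions for illustration. Geometric normalization from step (2) is not shown.

```python
import numpy as np

def normalize_gray(img):
    """Step (2), grayscale part: zero mean, unit variance (an assumed normalization)."""
    img = np.asarray(img, dtype=float)
    return (img - img.mean()) / (img.std() + 1e-12)

def split_into_blocks(img, bh, bw):
    """Step (3): non-overlapping bh x bw sub-blocks, taken row by row.
    Assumes the image size is a multiple of the block size."""
    H, W = img.shape
    return [img[i:i + bh, j:j + bw] for i in range(0, H, bh) for j in range(0, W, bw)]

def to_column_vector(blocks):
    """Step (4): stack each sub-block's columns into one column, then stack the sub-blocks in order."""
    return np.concatenate([b.flatten(order="F") for b in blocks])

def block_singular_values(blocks):
    """Singular value feature vector of each sub-block (compute_uv=False returns only the sigmas)."""
    return [np.linalg.svd(b, compute_uv=False) for b in blocks]

# Hypothetical 64 x 64 face image split into 32 sub-blocks of 8 x 16 pixels
rng = np.random.default_rng(0)
img = normalize_gray(rng.random((64, 64)))
blocks = split_into_blocks(img, 8, 16)
vec = to_column_vector(blocks)
features = block_singular_values(blocks)
```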

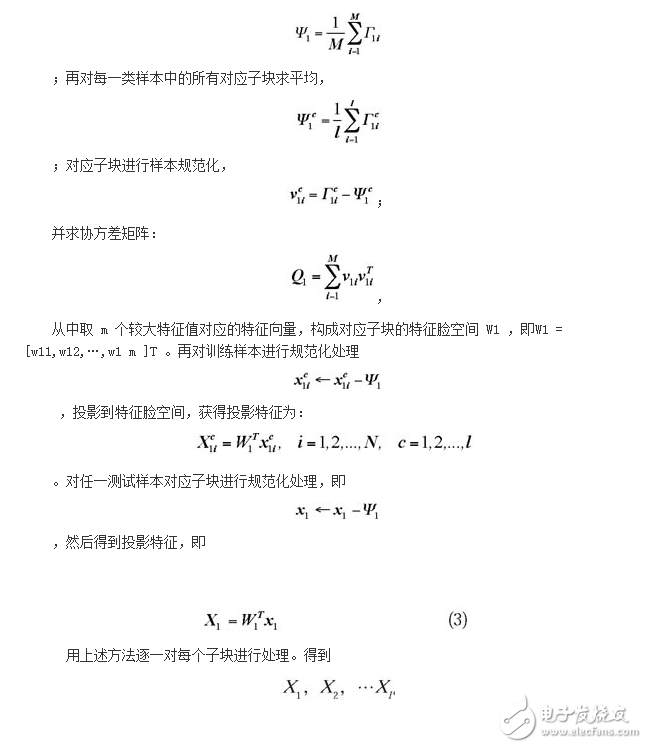

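Structural features such as the facial bone structure, the distribution of the eyes, and the shape of the nose are the main basis for identifying faces. The matrix of each face image is divided into sub-block matrices, each of which is reduced to a one-dimensional column vector. The average of all corresponding sub-blocks in the training set is then computed:

$$\bar{\mathbf{x}}_b = \frac{1}{N} \sum_{i=1}^{N} \mathbf{x}_{i,b},$$

where $N$ is the number of training images and $\mathbf{x}_{i,b}$ is the column vector of the b-th sub-block of the i-th image.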
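Once every image has been split the same way, averaging corresponding sub-blocks over the training set reduces to a single mean along the image axis. The random stand-in images and the 8×16 block size are hypothetical.

```python
import numpy as np

def block_vectors(img, bh, bw):
    """Each bh x bw sub-block flattened column by column into a 1-D vector, blocks in row order."""
    H, W = img.shape
    return np.stack([img[i:i + bh, j:j + bw].flatten(order="F")
                     for i in range(0, H, bh) for j in range(0, W, bw)])

rng = np.random.default_rng(0)
train = rng.random((10, 64, 64))                                   # 10 hypothetical normalized faces
blocks = np.stack([block_vectors(img, 8, 16) for img in train])    # shape (N, B, bh * bw)
mean_blocks = blocks.mean(axis=0)                                  # average of corresponding sub-blocks
```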

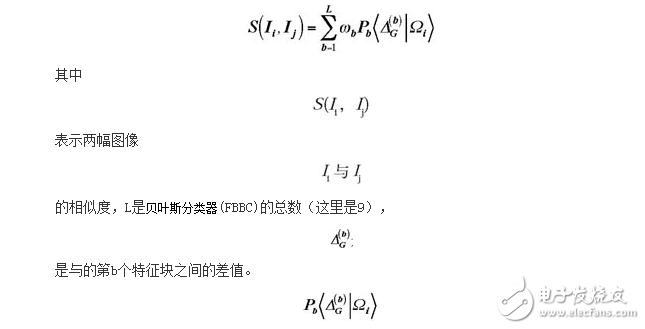

Design of the feature-block Bayesian classifiers

Each feature-block Bayesian classifier uses the discriminant information contained in its corresponding image block. To obtain a better classifier, these classifiers must be fused to give the final decision. Classifier fusion can be implemented in several ways, such as weighted summation or multiplication. This paper uses weighted summation:

$$p(\mathbf{x} \mid \omega_i) = \sum_{b=1}^{B} w_b \, p_b(\mathbf{x} \mid \omega_i),$$

where $p_b(\mathbf{x} \mid \omega_i)$ is the class-conditional probability density of class $\omega_i$ computed by the b-th Bayesian classifier, $w_b$ is the weight of the b-th Bayesian classifier, and $B$ is the number of sub-blocks.
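The weighted-summation fusion can be sketched as follows. Here `block_densities[b, i]` stands for the class-conditional density $p_b(\mathbf{x} \mid \omega_i)$ produced by the b-th block classifier, and `weights[b]` stands for its weight $w_b$. The decision picks the class with the largest fused value, which assumes equal class priors. All numbers are illustrative.

```python
import numpy as np

def fuse_and_classify(block_densities, weights):
    """Weighted summation over blocks: fused[i] = sum_b w_b * p_b(x | class i).
    Returns the fused scores and the index of the winning class (equal priors assumed)."""
    fused = np.asarray(weights, dtype=float) @ np.asarray(block_densities, dtype=float)
    return fused, int(np.argmax(fused))

# Three block classifiers, four classes (made-up densities)
block_densities = [
    [0.10, 0.60, 0.20, 0.10],
    [0.30, 0.30, 0.30, 0.10],
    [0.05, 0.20, 0.70, 0.05],
]
weights = [0.5, 0.3, 0.2]
fused, label = fuse_and_classify(block_densities, weights)   # fused ≈ [0.15, 0.43, 0.33, 0.09]
```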

The Bayesian classifiers corresponding to different feature blocks contribute differently to the final decision. This paper assigns weights based on the classification accuracy of each sub-classifier: each sub-classifier is applied back to its own training set, and its recognition rate on that set is computed. These recognition rates are then used to calculate the weight of the b-th sub-classifier with the following formula:
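The accuracy-based weighting can be sketched as below. This sketch assumes the common choice of normalizing the training-set recognition rates $r_b$ so that the weights sum to one, $w_b = r_b / \sum_j r_j$. That normalization, the helper names, and the predictions are all made-up assumptions for illustration.

```python
import numpy as np

def recognition_rate(predicted, true):
    """Fraction of training samples that a sub-classifier labels correctly."""
    return float(np.mean(np.asarray(predicted) == np.asarray(true)))

def weights_from_rates(rates):
    """ASSUMED weighting rule: w_b = r_b / sum_j r_j, so the weights sum to 1."""
    rates = np.asarray(rates, dtype=float)
    return rates / rates.sum()

# Made-up training-set predictions of three sub-classifiers
y_true = np.array([0, 1, 2, 3, 0, 1, 2, 3, 0, 1])
pred_0 = y_true.copy()                      # all correct -> rate 1.0
pred_1 = y_true.copy()
pred_1[[0, 1]] = 3                          # 2 mistakes -> rate 0.8
pred_2 = y_true.copy()
pred_2[:5] = (y_true[:5] + 1) % 4           # 5 mistakes -> rate 0.5

rates = [recognition_rate(p, y_true) for p in (pred_0, pred_1, pred_2)]
weights = weights_from_rates(rates)
```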

Figure 2 How the RBF neural network works

RBF neural network design

Using the MATLAB toolbox directly is a relatively simple design method. The toolbox provides several functions for this purpose: newrb, newrbe, newpnn, newgrnn, and, in earlier versions, solverb. See the relevant documentation for how to use these functions and what their parameters mean. To watch the performance function change at each step of network construction, it is recommended to set the DF parameter to 1. Progress is then displayed after every step, which gives a clear picture of how the network is generated. To view the parameters of the designed network, use net.IW{1} for the centers, net.LW{2} for the output-layer weights, net.b{1} for the hidden-layer biases, and net.b{2} for the output-layer biases. These parameters can also be modified; for example, to change the output-layer bias, assign a new value with net.b{2} = .... With this method, the centers of the network are taken from the input training samples, and their number grows gradually from small to large. When choosing the spread parameter, it is recommended not to make it too small, because a value that is too small may hurt recognition of the test samples. I used this approach for pattern classification, but the same should hold for curve fitting.
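For readers without MATLAB, the incremental idea can be sketched in NumPy: start small and add centers one at a time from the training samples until an error goal is met. This is not the toolbox's algorithm. The greedy rule of adding the sample with the largest current error, the least-squares refit of weights plus bias, and all names are assumptions. The `spread` scaling mirrors the documented newrb/newrbe convention that a unit's output falls to 0.5 at a distance of `spread` from its center.

```python
import numpy as np

def hidden_outputs(X, centers, spread):
    """Gaussian units scaled so the output is 0.5 at distance `spread` from a center."""
    d = np.sqrt(((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2))
    return np.exp(-(np.sqrt(np.log(2.0)) * d / spread) ** 2)

def grow_rbf(X, T, spread=1.0, goal=1e-3, max_neurons=None, display_every=1):
    """Greedy incremental RBF design (hypothetical sketch):
    add one neuron at a time, centred on the worst-fitted training sample,
    refit output weights and bias by least squares, stop when MSE <= goal."""
    max_neurons = max_neurons or len(X)
    chosen, history = [], []
    Y = np.zeros_like(T)
    while len(chosen) < max_neurons:
        errors = np.sum((T - Y) ** 2, axis=1)
        errors[chosen] = -np.inf                      # never reuse a sample as a center
        chosen.append(int(np.argmax(errors)))
        H = hidden_outputs(X, X[chosen], spread)
        H1 = np.hstack([H, np.ones((len(X), 1))])     # last column carries the output bias
        Wb = np.linalg.lstsq(H1, T, rcond=None)[0]
        Y = H1 @ Wb
        mse = float(np.mean((T - Y) ** 2))
        history.append(mse)
        if display_every and len(chosen) % display_every == 0:
            print(f"neurons={len(chosen):3d}  mse={mse:.3g}")   # like showing every DF steps
        if mse <= goal:
            break
    return X[chosen], Wb, history

# Curve-fitting example: 21 samples of sin(pi x) on [-1, 1]
X = np.linspace(-1.0, 1.0, 21)[:, None]
T = np.sin(np.pi * X)
centers, Wb, history = grow_rbf(X, T, spread=0.5, goal=1e-3, display_every=5)
```

A larger `spread` gives smoother units that overlap more, which matches the advice above not to choose it too small.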

Alternatively, the centers can be determined with a clustering algorithm, of which many are available. The most common is K-means clustering; others include nearest-neighbor clustering, fuzzy clustering, and support-vector-based methods. These methods first determine the centers. The output-layer weights and bias are then determined by LMS, RLS, or similar algorithms. When designing the network, the output bias can be included or omitted as needed.
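Clustering-based design can be sketched with plain K-means for the centers and the LMS (Widrow-Hoff) rule for the output layer, with an optional bias column. The width heuristic $\sigma = d_{\max}/\sqrt{k}$, the learning rate, the epoch count, and the three-cluster toy data are all assumptions of this sketch.

```python
import numpy as np

def kmeans(X, k, iters=50, seed=0):
    """Plain K-means (Lloyd's algorithm) to place the hidden-layer centers."""
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=k, replace=False)].copy()
    for _ in range(iters):
        d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = np.argmin(d2, axis=1)
        for j in range(k):
            if np.any(labels == j):          # keep the old center if a cluster becomes empty
                centers[j] = X[labels == j].mean(axis=0)
    return centers

def gaussian_hidden(X, centers, sigma):
    d2 = ((X[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
    return np.exp(-d2 / sigma ** 2)

def lms_output_layer(H, T, lr=0.05, epochs=200, use_bias=True):
    """LMS (Widrow-Hoff) rule for the linear output layer: W <- W + lr * h (t - y)."""
    if use_bias:                             # the output bias is optional
        H = np.hstack([H, np.ones((len(H), 1))])
    W = np.zeros((H.shape[1], T.shape[1]))
    for _ in range(epochs):
        for h, t in zip(H, T):
            W += lr * np.outer(h, t - h @ W)
    return W, H

# Toy 3-class data: three 2-D clusters with one-hot targets (illustrative)
rng = np.random.default_rng(0)
means = np.array([[0.0, 0.0], [3.0, 0.0], [0.0, 3.0]])
X = np.vstack([mu + 0.3 * rng.standard_normal((20, 2)) for mu in means])
T = np.repeat(np.eye(3), 20, axis=0)

centers = kmeans(X, k=3)
d_max = np.max(np.linalg.norm(centers[:, None] - centers[None, :], axis=2))
sigma = d_max / np.sqrt(3)                   # assumed width heuristic
H = gaussian_hidden(X, centers, sigma)
W, H1 = lms_output_layer(H, T)
mse = np.mean((H1 @ W - T) ** 2)
```

Swapping LMS for RLS would change only `lms_output_layer`; the center selection stays the same.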

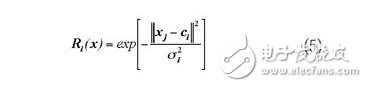

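Assume the input vector is $\mathbf{x} = (x_1, x_2, \ldots, x_r)^T$, where $x_j$ ($1 \le j \le r$) is the j-th input-layer neuron, and let $\mathbf{c}_i$ be the center of the i-th hidden-layer neuron. The output of the i-th hidden-layer node is

$$R_i(\mathbf{x}) = R\left(\lVert \mathbf{x} - \mathbf{c}_i \rVert\right), \quad i = 1, 2, \ldots, u,$$

where $\lVert \cdot \rVert$ denotes the Euclidean norm. When the Gaussian kernel is chosen as the radial basis function, the output is

$$R_i(\mathbf{x}) = \exp\left(-\frac{\lVert \mathbf{x} - \mathbf{c}_i \rVert^2}{\sigma_i^2}\right),$$

where $\sigma_i$ is the width of the i-th hidden-layer neuron. The output of the j-th output-layer node is

$$y_j(\mathbf{x}) = \sum_{k=1}^{u} w_{kj} \, R_k(\mathbf{x}),$$

where $w_{kj}$ is the connection weight from hidden-layer node k to output node j.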
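Below is a direct NumPy transcription of the Gaussian hidden layer and the linear output layer. At test time, the decision is taken as the output node with the largest value. The shapes and the tiny numeric example are illustrative assumptions.

```python
import numpy as np

def rbf_forward(x, centers, widths, W):
    """Forward pass of a three-layer RBF network.
    x: (r,) input; centers: (u, r) rows c_i; widths: (u,) sigma_i; W: (u, J) entries w_kj."""
    R = np.exp(-np.sum((x - centers) ** 2, axis=1) / widths ** 2)   # R_i(x), i = 1..u
    y = R @ W                                                          # y_j = sum_k w_kj R_k(x)
    return R, y

centers = np.array([[0.0, 0.0], [1.0, 0.0]])   # u = 2 hidden units in a 2-D input space
widths = np.array([1.0, 1.0])
W = np.eye(2)                                  # J = 2 output nodes
R, y = rbf_forward(np.array([0.0, 0.0]), centers, widths, W)
winner = int(np.argmax(y))                     # competitive (winner-take-all) decision
```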

Construction and initialization of RBF neural network

The hidden-layer clustering initialization of the RBF neural network proceeds as follows [10]:

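(1) Set the number of hidden-layer nodes to u = s, where s is the number of classes. Initially, each class is assumed to form one cluster center, which is then adjusted as the situation requires.

(2) The center of the k-th hidden-layer neuron is the mean of the feature vectors of class k:

$$\mathbf{c}_k = \frac{1}{n_k} \sum_{i=1}^{n_k} \mathbf{p}_k(i), \quad k = 1, 2, \ldots, u,$$

where $n_k$ is the number of training vectors in class k and $\mathbf{p}_k(i)$ is the i-th of them.

(3) Compute the Euclidean distance from the mean of class k to the farthest point belonging to class k:

$$d_k = \max_{i} \lVert \mathbf{p}_k(i) - \mathbf{c}_k \rVert$$

(4) Compute the distance from each cluster center j to cluster center k:

$$d(k, j) = \lVert \mathbf{c}_k - \mathbf{c}_j \rVert, \quad j = 1, 2, \ldots, s, \ j \ne k$$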

(5) Containment rules: If and, then class k is included in the class, the class should be
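Steps (1) to (4) translate into a few lines of NumPy: one center per class, the class radius $d_k$, and the matrix of center-to-center distances $d(k, j)$. Step (5) is left out of this sketch because its exact conditions are not given. The four-point data set is hypothetical.

```python
import numpy as np

def init_hidden_layer(X, labels):
    """Steps (1)-(4): u = s centers (one per class), class radii d_k, center distances d(k, j)."""
    classes = np.unique(labels)                                              # s classes -> u = s nodes
    centers = np.stack([X[labels == c].mean(axis=0) for c in classes])        # c_k: class means
    radii = np.array([np.max(np.linalg.norm(X[labels == c] - centers[k], axis=1))
                      for k, c in enumerate(classes)])                         # d_k: farthest member
    center_dist = np.linalg.norm(centers[:, None, :] - centers[None, :, :], axis=2)  # d(k, j)
    return centers, radii, center_dist

X = np.array([[0.0, 0.0], [2.0, 0.0], [10.0, 0.0], [10.0, 4.0]])
labels = np.array([0, 0, 1, 1])
centers, radii, center_dist = init_hidden_layer(X, labels)
```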

RBF neural network algorithm

Network learning reduces the output error by adjusting the connection weights and the centers and widths of the hidden layer.

(1) Adjustment of the connection weights

Define the error function as:

where m is the iteration number.
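This sketch assumes the error function has the usual sum-of-squares form $E(m) = \tfrac{1}{2}\sum (t - y(m))^2$. It adjusts only the output weights, by batch gradient descent, and stops when the error tolerance is met or after a maximum number of iterations. The step size $1/\lVert H\rVert_2^2$, where H is the matrix of hidden-layer outputs, keeps E from increasing on this quadratic. The toy network is hypothetical.

```python
import numpy as np

def train_output_weights(H, T, eta=None, tol=1e-6, max_iter=5000):
    """Batch gradient descent on E(m) = 0.5 * sum (t - y(m))^2 with y(m) = H W(m).
    Stops when E(m) <= tol or after max_iter iterations."""
    if eta is None:
        eta = 1.0 / np.linalg.norm(H, 2) ** 2    # step 1/L: E never increases on this quadratic
    W = np.zeros((H.shape[1], T.shape[1]))
    history = []
    for m in range(max_iter):                     # m is the iteration number
        residual = T - H @ W
        E = 0.5 * np.sum(residual ** 2)
        history.append(E)
        if E <= tol:                              # error tolerance reached
            break
        W += eta * H.T @ residual                 # W(m+1) = W(m) - eta * dE/dW
    return W, history

# Hidden-layer outputs of a hypothetical network with 4 Gaussian units on 1-D inputs
x = np.linspace(-1.0, 1.0, 20)[:, None]
c = np.array([[-0.75], [-0.25], [0.25], [0.75]])
H = np.exp(-((x - c.T) ** 2) / 0.25)
T = H @ np.array([[1.0], [-2.0], [0.5], [1.5]])   # targets the network can represent exactly
W, history = train_output_weights(H, T)
```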

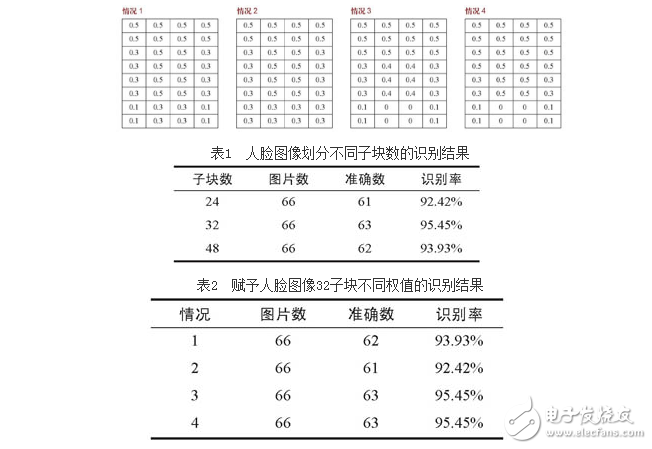

Experimental results and analysis

The face recognition experiments use face images from the Yale face database. The weighted and reconstructed singular value vectors X1, X2, ..., Xl of the face image matrices (where l is the number of training samples) are fed into the RBF neural network in sequence for training. Training stops when the error tolerance is met or the maximum number of training iterations is reached. During testing, identification is decided by competitive (winner-take-all) selection.

The weights selected in this paper for the 32 sub-blocks of the face image are as follows:

The experimental results show that structural features such as the facial bone structure, the distribution of the eyes, and the shape of the nose are the main basis for identifying faces. A reasonable distribution of sub-block weights highlights the facial bone features and weakens or eliminates features that vary with facial expression, such as the mouth and skin wrinkles. This is similar to the way humans recognize faces, and it gives better recognition performance. However, there should not be too many sub-blocks; otherwise, the computational burden of the RBF neural network increases and the recognition rate also decreases.

Conclusion

This paper proposes a face recognition method based on singular value compression of image blocks that fuses an RBF neural network with a Bayesian classifier. The method imitates the human ability to disregard the changing parts of the same face during recognition, and it distributes weights according to the recognition performance of the RBF neural network. Experiments show that the method achieves good results in both dimensionality reduction and recognition rate. It also gives good recognition accuracy and speed for frontal faces with large variations (especially in the lower jaw).
