In the field of object detection and recognition, the Chinese University of Hong Kong-SenseTime Joint Laboratory published a paper at CVPR 2018 proposing a video object detection algorithm based on a scale-time grid, which addresses how to optimize and balance the accuracy and speed of video object detection. This article is the sixth installment in a series explaining SenseTime's CVPR 2018 papers.

Introduction

This article focuses on how to better optimize and balance the accuracy and speed of object detection in video. To achieve high accuracy, object detectors usually rely on high-capacity convolutional neural networks to extract image features, which makes real-time detection difficult. The key to solving this problem is finding an effective trade-off between accuracy and detection speed. Previous work has usually sought this trade-off by optimizing the network structure. This paper instead proposes a new method that reallocates computation over a Scale-Time Lattice (ST-Lattice), referred to below as the scale-time grid.

The proposed method achieves 79.6 mAP at 20 fps and 79.0 mAP at 62 fps on the ImageNet VID dataset. The main contributions of the paper are:

A scale-time grid is proposed, which provides a rich design space for the algorithm to optimize the object detection performance.

Based on the scale-time grid, a new framework for object detection in video is proposed, achieving a balance between excellent accuracy and fast detection speed.

Several new technical modules are designed, including an efficient propagation module and a dynamic keyframe selection module.

The basic idea

There is strong continuity and information redundancy between adjacent frames of a video. To improve efficiency, a detection framework should exploit these properties. Previous methods have already explored video object detection extensively; they typically comprise several steps, such as single-frame object detection, propagation over time, and refinement of spatial positions. How to combine these separate steps more efficiently is a question worth studying.

The basic idea of the paper is to allocate computation more effectively over a computational grid: an accurate but slow static-image object detector is applied to sparse keyframes, and simple, efficient networks then propagate and refine these detection results along both the temporal and the spatial dimensions, achieving a better balance between accuracy and speed.

Scale-time grid

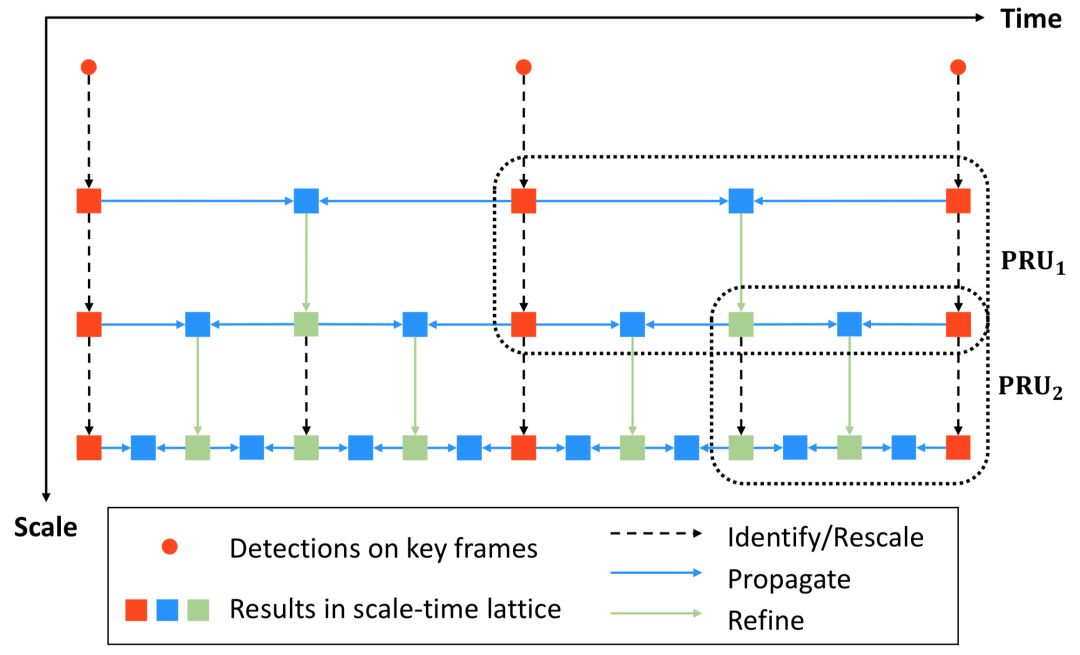

The paper represents the scale-time grid as a directed acyclic graph (shown in Figure 1). Each node in the graph represents the intermediate result at a certain image scale and time point, i.e., a set of detection boxes. The nodes are arranged in a grid: time runs from left to right, and the image scale (resolution) increases from top to bottom. Each edge of the graph represents a specific operation that takes the result of one node as input and outputs the detection result of another node. Two operations are defined on the graph, temporal propagation and spatial refinement, which correspond to the horizontal and vertical edges in the figure, respectively. Temporal propagation passes detection boxes between adjacent frames at the same image scale. Spatial refinement corrects the positions of the detection boxes within the same frame to obtain results at a higher image scale. In the scale-time grid, detection results are propagated from node to node through these operations until they reach all the nodes in the bottom row, i.e., the detection results for every frame at the largest image scale.

Figure 1: Scale-time grid diagram

Based on the scale-time grid, the video object detection algorithm in this paper is divided into the following three steps:

Detect on sparse key frames (using a static image-based object detector) to get results on sparse nodes;

Plan a path from the above sparse nodes to dense nodes;

Following this path, propagate the detection results from the keyframes to the intermediate frames and refine their positions.

The scale-time grid provides a rich design space for balancing the accuracy and speed of video object detection. The total detection time is the sum of the costs of all edges along the path, including the time spent by the single-frame object detector and the time spent on propagation and refinement. A desired trade-off between accuracy and speed can be reached by allocating different amounts of computation to different edges.
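The time accounting described here can be sketched as a sum of per-edge costs along a chosen path. The following is a minimal Python sketch under stated assumptions: the names `EdgeCosts`, `total_time_ms`, and `average_fps` are hypothetical, and the millisecond values are made up for illustration, not taken from the paper.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EdgeCosts:
    """Hypothetical per-operation costs in milliseconds (illustrative only)."""
    detector_ms: float = 100.0  # accurate but slow single-frame detector
    propagate_ms: float = 2.0   # one temporal propagation edge
    refine_ms: float = 3.0      # one spatial refinement edge


def total_time_ms(num_keyframes: int, num_propagations: int,
                  num_refinements: int,
                  costs: EdgeCosts = EdgeCosts()) -> float:
    """Sum the cost of every operation used along the path."""
    return (num_keyframes * costs.detector_ms
            + num_propagations * costs.propagate_ms
            + num_refinements * costs.refine_ms)


def average_fps(num_frames: int, total_ms: float) -> float:
    """Average throughput over the whole clip, in frames per second."""
    return 1000.0 * num_frames / total_ms


# Example: a 24-frame clip with 2 keyframes, the other 22 frames each
# reached by one propagation edge and one refinement edge.
clip_ms = total_time_ms(num_keyframes=2, num_propagations=22, num_refinements=22)
clip_fps = average_fps(24, clip_ms)
```

With these made-up costs the clip takes 310 ms in total. Running the detector on fewer keyframes, or spending less time per propagation or refinement edge, shifts the balance toward speed, while more keyframes shift it toward accuracy.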

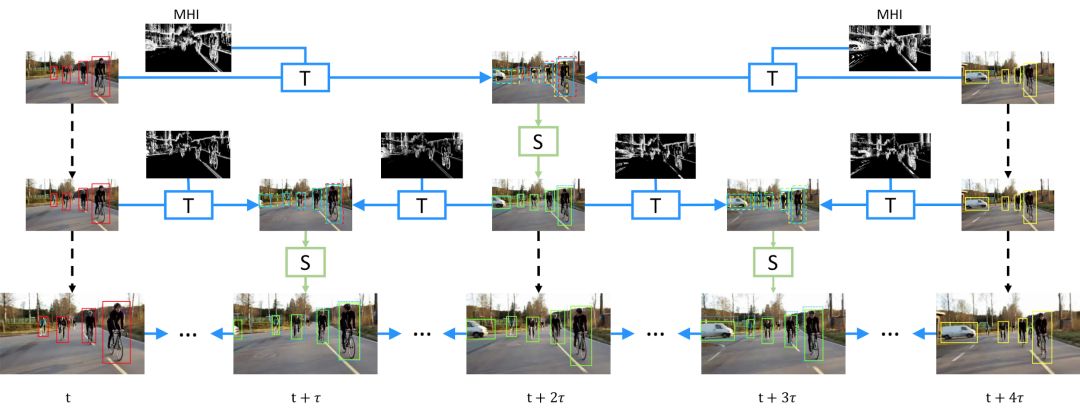

Figure 2: Temporal propagation network (T) and spatial refinement network (S) in the scale-time grid

Implementation of different modules

Propagation and Refinement Unit (PRU)

The propagation and refinement unit (shown in Figure 2) takes the detection results of two adjacent keyframes as input, propagates them to the intermediate frame using the temporal propagation network, and then refines them spatially using the spatial refinement network. The temporal propagation network mainly exploits motion information in the video to predict the larger displacements between two frames. The spatial refinement network regresses the positional offsets of the detection boxes, correcting both the errors of the boxes themselves and the errors introduced by propagation. These two operations are applied iteratively to obtain the final detection results.

In the temporal propagation network, the algorithm represents the motion between two frames with a motion history image (MHI), feeds it into the network, and regresses the displacement of the object over that interval. Compared with commonly used motion representations such as optical flow, an MHI is very fast to compute, which keeps the temporal propagation network efficient.

In the spatial refinement network, the algorithm uses the same structure as Fast R-CNN, takes the RGB image of the current frame as input, and regresses the offsets of the detection boxes. The two small networks are optimized jointly by training with a multi-task loss function.
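To illustrate why an MHI is cheap to compute, here is a minimal NumPy sketch of the classic motion history image built from frame differences. It is an assumption-laden illustration: the function name `motion_history_image` and the `diff_threshold` parameter are hypothetical, and the paper's exact MHI formulation may differ.

```python
import numpy as np


def motion_history_image(frames, diff_threshold=15.0):
    """Build a motion history image from grayscale frames ordered in time.

    Pixels that changed most recently get the largest value; older motion
    fades by one step per frame. The output is normalized to [0, 1].
    """
    frames = [np.asarray(f, dtype=np.float32) for f in frames]
    num_steps = len(frames) - 1
    mhi = np.zeros_like(frames[0])
    for t in range(1, len(frames)):
        # A pixel counts as "moving" if it changed by more than the threshold.
        moving = np.abs(frames[t] - frames[t - 1]) > diff_threshold
        # Moving pixels are reset to the newest value; the rest decay.
        mhi = np.where(moving, float(num_steps), np.maximum(mhi - 1.0, 0.0))
    return mhi / max(num_steps, 1)


# Toy clip: a single bright pixel moving one column to the right per frame.
toy_frames = []
for col in range(3):
    frame = np.zeros((4, 4), dtype=np.float32)
    frame[0, col] = 255.0
    toy_frames.append(frame)

toy_mhi = motion_history_image(toy_frames)
```

The whole computation is a few element-wise operations per frame, with no per-pixel optimization or learned flow network, which is consistent with the speed advantage over optical flow mentioned above.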
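Regressed offsets are usually turned back into corrected boxes with the standard R-CNN box parameterization. The sketch below assumes that parameterization (center shifts scaled by box size, log-scale width and height changes); the article does not state the exact form used in the paper, and the name `apply_box_deltas` is hypothetical.

```python
import numpy as np


def apply_box_deltas(boxes, deltas):
    """Apply (dx, dy, dw, dh) offsets to boxes given as (x1, y1, x2, y2).

    Assumes the standard R-CNN parameterization: dx and dy shift the box
    center in units of box width and height, dw and dh rescale the width
    and height on a log scale.
    """
    boxes = np.asarray(boxes, dtype=np.float64)
    deltas = np.asarray(deltas, dtype=np.float64)
    widths = boxes[:, 2] - boxes[:, 0]
    heights = boxes[:, 3] - boxes[:, 1]
    center_x = boxes[:, 0] + 0.5 * widths
    center_y = boxes[:, 1] + 0.5 * heights

    new_center_x = center_x + deltas[:, 0] * widths
    new_center_y = center_y + deltas[:, 1] * heights
    new_widths = widths * np.exp(deltas[:, 2])
    new_heights = heights * np.exp(deltas[:, 3])

    return np.stack([new_center_x - 0.5 * new_widths,
                     new_center_y - 0.5 * new_heights,
                     new_center_x + 0.5 * new_widths,
                     new_center_y + 0.5 * new_heights], axis=1)


# A 40x40 box shifted right by 10% of its width, size unchanged.
refined = apply_box_deltas([[10.0, 20.0, 50.0, 60.0]], [[0.1, 0.0, 0.0, 0.0]])
```

Because the offsets are relative to the box size, the same small network output can correct both large and small boxes.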

Keyframe selection

Keyframe selection has an important influence on the final detection speed and accuracy. The simplest and most straightforward method is to select keyframes uniformly along the timeline, and most previous methods adopt this strategy. This paper, however, takes the redundancy of information between frames into account: not every frame is equally important. A non-uniform sampling strategy is therefore needed that selects more keyframes in periods when objects move fast and are difficult to propagate, and fewer keyframes elsewhere.

The specific process is as follows. First, single-frame object detection is performed on a very sparse set of frames (for example, every 24 frames), and the difficulty of propagation between each pair of adjacent keyframes is then measured from the detection results. If the easiness of propagation falls below a certain threshold, an extra keyframe is inserted between the two frames. When measuring easiness, the paper considers two factors: the size of the boxes and how fast the objects move. Refer to the original paper for the specific formula.
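The selection procedure can be sketched as follows. This is a minimal illustration, not the paper's implementation: `select_keyframes` and its parameters are hypothetical names, the easiness measure is passed in as a caller-supplied function because the actual formula is not given here, and always including the last frame and inserting the extra keyframe at the midpoint are assumptions.

```python
from typing import Callable, List


def select_keyframes(num_frames: int,
                     easiness: Callable[[int, int], float],
                     interval: int = 24,
                     threshold: float = 0.5) -> List[int]:
    """Pick sparse keyframes, then densify where propagation looks hard.

    `easiness(left, right)` is a placeholder for a measure computed from
    the detections on two adjacent keyframes (box size, motion speed).
    """
    # Start from a uniform, very sparse set of keyframes.
    sparse = list(range(0, num_frames, interval))
    # Assumption: the last frame is always a keyframe so every
    # intermediate frame lies between two keyframes.
    if sparse[-1] != num_frames - 1:
        sparse.append(num_frames - 1)

    keyframes = [sparse[0]]
    for left, right in zip(sparse, sparse[1:]):
        # Insert one extra keyframe at the midpoint of hard segments.
        if right - left > 1 and easiness(left, right) < threshold:
            keyframes.append((left + right) // 2)
        keyframes.append(right)
    return keyframes


# Toy easiness: pretend the segment starting at frame 24 has fast motion.
def toy_easiness(left: int, right: int) -> float:
    return 0.2 if left == 24 else 0.9


selected = select_keyframes(49, toy_easiness)
```

In this toy run the first segment is left alone and only the hard segment receives an extra keyframe, so detector time is spent where propagation is least reliable.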

Tube Rescoring

Since the detection boxes are propagated over time, the detection results are not independent frame-by-frame results but are naturally linked into object tubes, and these tubes can then be reclassified. The paper trains an R-CNN as the classifier. For each object tube, K frames are uniformly sampled as input, and the average of their outputs is used as the new classification result. The score of each box in the tube is then adjusted according to this new classification result.
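A minimal sketch of this rescoring step is shown below. The names `rescore_tube`, `classify_frame`, and `blend` are hypothetical; in particular, the rule that blends each box score with the tube-level score is an assumption made for illustration, since the article does not specify how the box scores are adjusted.

```python
from typing import Callable, Sequence

import numpy as np


def rescore_tube(box_scores: Sequence[float],
                 classify_frame: Callable[[int], float],
                 k: int = 5,
                 blend: float = 0.5) -> np.ndarray:
    """Rescore every box in one object tube using K uniformly sampled frames.

    `classify_frame(i)` stands in for the trained classifier's score on
    the tube's box in frame i.
    """
    num_boxes = len(box_scores)
    # Uniformly spaced frame indices (duplicates dropped for short tubes).
    positions = np.linspace(0, num_boxes - 1, num=min(k, num_boxes))
    sample_idx = np.unique(positions.round().astype(int))
    # The tube-level score is the average classifier output on the samples.
    tube_score = float(np.mean([classify_frame(int(i)) for i in sample_idx]))
    # Assumed adjustment rule: blend each box score with the tube score.
    scores = np.asarray(box_scores, dtype=np.float64)
    return (1.0 - blend) * scores + blend * tube_score


# Toy tube of five boxes; the stand-in classifier reads from a fixed list.
toy_classifier_scores = [0.9, 0.1, 0.7, 0.1, 0.5]
rescored = rescore_tube([0.2, 0.4, 0.6, 0.8, 1.0],
                        lambda i: toy_classifier_scores[i],
                        k=3)
```

Sampling only K frames keeps the classifier cost independent of tube length, while the shared tube-level score pulls the scores of all boxes in the same tube toward a consistent value.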

Experimental results

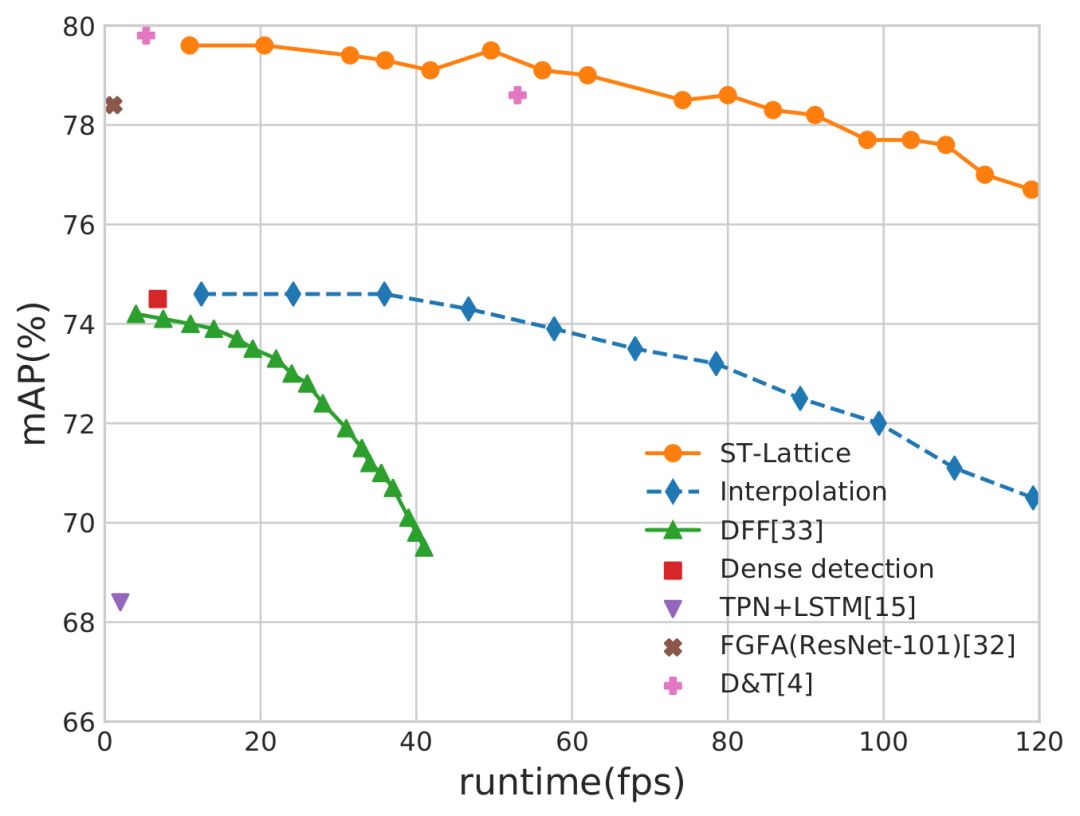

Figure 3 plots detection speed (fps) against accuracy (mAP) for the algorithm based on the scale-time grid and compares it with previous methods. The method outperforms both the baseline and previous state-of-the-art methods.

Figure 3: Comparison of detection speed and accuracy for different video object detection algorithms

Conclusion

For object detection in video, the paper proposes the scale-time grid, a flexible framework that provides a rich design space for balancing accuracy and detection speed. The method combines single-frame detection, temporal propagation, and multi-scale spatial processing to address this problem. The experiments cover a variety of designs and configurations based on the framework and show that it can achieve accuracy comparable to current state-of-the-art methods while greatly improving detection speed. The framework can be used not only for object detection but also for other video tasks such as object segmentation and object tracking.
