This section compares five open source deep learning frameworks, TensorFlow, Caffe, Keras, Torch, and DL4J, along three dimensions: hardware support rate, speed and accuracy, and community activity.

2.3.1 Hardware Support Rate

The hardware support rate studied in this section refers to how efficiently, and with what general performance, each open source deep learning framework supports different CPU/GPU configurations.

Table 2.1 shows the general support performance of each framework for different hardware.

2.3.2 Speed and Accuracy

In this section, the gradient computation, forward propagation, and backpropagation times are reported as a single total rather than broken out separately. All tests were run on the CPU.

Model: This section uses a fully connected neural network (FCNN) as the model for the deep learning framework speed tests. An FCNN is a feedforward multilayer perceptron: the connections between neurons run in one direction only and contain no cycles, so timing data is easy to obtain. Because the main purpose of an FCNN is data classification, it is also suitable for comparing accuracy across frameworks.
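As a rough illustration of the feedforward, cycle-free structure described above, the following pure-Python sketch passes an input through a small FCNN layer by layer. The layer sizes (784, 32, 10), the weight initialization, and the helper names `init_layer`, `forward`, and `softmax` are assumptions made for this example and are not taken from any of the benchmarked frameworks.

```python
import math
import random


def init_layer(n_in, n_out, rng):
    """Return (weights, biases) for one fully connected layer."""
    scale = 1.0 / math.sqrt(n_in)  # illustrative initialization, an assumption
    weights = [[rng.uniform(-scale, scale) for _ in range(n_in)]
               for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def forward(layers, x):
    """Propagate x through the layers in order.

    Each layer only reads the output of the previous one, so there are
    no cycles: this is what makes the network feedforward.
    """
    for i, (weights, biases) in enumerate(layers):
        z = [sum(w * v for w, v in zip(row, x)) + b
             for row, b in zip(weights, biases)]
        is_last = i == len(layers) - 1
        # Hidden layers use tanh; the output layer returns raw scores.
        x = z if is_last else [math.tanh(v) for v in z]
    return x


def softmax(scores):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


rng = random.Random(0)
sizes = [784, 32, 10]  # hypothetical: input, one hidden layer, 10 classes
layers = [init_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]
probs = softmax(forward(layers, [0.0] * 784))
predicted_class = probs.index(max(probs))
```

The predicted class is simply the index of the largest probability, which is how a classifier of this kind would typically be scored for accuracy.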

Data set: This section uses the MNIST handwritten digit image set as the FCNN data set for testing the frameworks. MNIST consists of 60,000 training images and 10,000 test images, all of which are handwritten digit images of 28×28 pixels.
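To make the input size concrete: a 28×28 image flattens to a 784-element vector, and the ten digit classes can be encoded as one-hot targets. The sketch below assumes 8-bit grayscale pixel values (0 to 255) scaled to [0, 1]; the helper names `flatten_image` and `one_hot` are hypothetical, and the scaling is a common preprocessing choice rather than something the benchmark is stated to use.

```python
ROWS, COLS = 28, 28  # MNIST image size in pixels


def flatten_image(image):
    """Turn a 28x28 grid of 0-255 pixel values into a 784-element
    vector scaled to the range [0, 1]."""
    if len(image) != ROWS or any(len(row) != COLS for row in image):
        raise ValueError("expected a 28x28 image")
    return [pixel / 255.0 for row in image for pixel in row]


def one_hot(label, num_classes=10):
    """Encode a digit label (0-9) as a one-hot target vector."""
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


blank = [[0] * COLS for _ in range(ROWS)]  # an all-black placeholder image
vector = flatten_image(blank)
target = one_hot(3)
```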

Test methods: The goal of this section is to compare how long an FCNN takes to converge on each framework and how accurately the pre-trained network predicts classification results on each framework. The main aspects are as follows: 1. convergence speed; 2. prediction time consumption; 3. classification accuracy; 4. source code size.
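A minimal sketch of how items 1 to 3 could be measured for a single framework is shown below. It assumes a hypothetical model object exposing `fit` and `predict` methods, and it uses wall-clock training time over a fixed number of epochs as a stand-in for convergence speed. `MajorityClassifier` is a toy placeholder so the example runs without any framework installed; it is not one of the benchmarked models.

```python
import time


def benchmark(model, train_x, train_y, test_x, test_y, epochs=10):
    """Measure training time, prediction time, and classification accuracy.

    `model` is any object with fit(xs, ys, epochs=...) and predict(xs);
    this interface is an assumption made for the sketch.
    """
    start = time.perf_counter()
    model.fit(train_x, train_y, epochs=epochs)
    train_seconds = time.perf_counter() - start

    start = time.perf_counter()
    predictions = model.predict(test_x)
    predict_seconds = time.perf_counter() - start

    correct = sum(1 for p, y in zip(predictions, test_y) if p == y)
    return {
        "train_seconds": train_seconds,
        "predict_seconds": predict_seconds,
        "accuracy": correct / len(test_y),
    }


class MajorityClassifier:
    """Toy stand-in: always predicts the most frequent training label."""

    def fit(self, xs, ys, epochs=1):
        self.label = max(set(ys), key=ys.count)

    def predict(self, xs):
        return [self.label for _ in xs]


result = benchmark(MajorityClassifier(),
                   [[0], [1], [2]], [1, 1, 0],   # tiny training set
                   [[3], [4]], [1, 0])           # tiny test set
```

Using `time.perf_counter` rather than `time.time` avoids most clock-adjustment artifacts when timing short runs. Item 4, source code size, is a static property of each implementation and is not covered by this harness.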

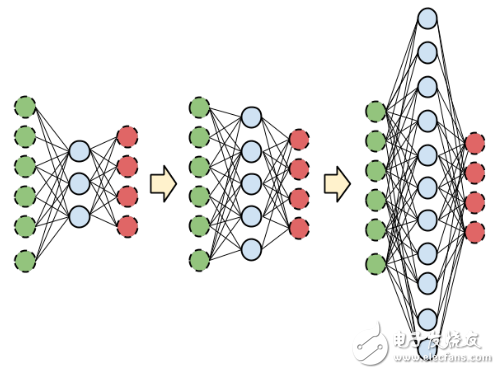

To evaluate the scalability of the model, items 1 to 3 above are measured under different scaling factors. The neural network structure is scaled in two ways: 1. the number of neurons is kept the same while the "depth" of the network is changed (see Figure 2.9); 2. the number of layers is kept the same while the "width" of the network is changed (see Figure 2.10).

Figure 2.9 Neural network with "depth" changed

Figure 2.10 Neural network with "width" changed

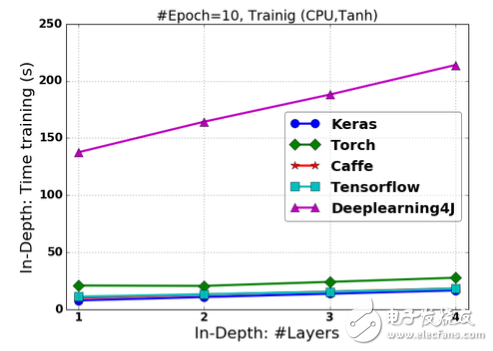

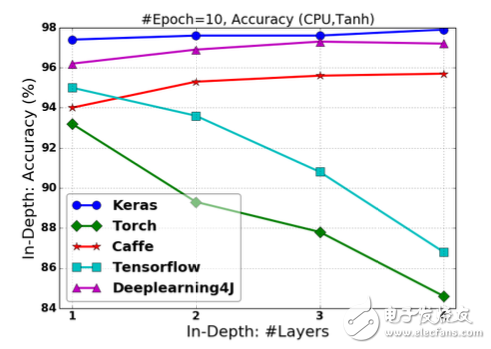
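The two scaling schemes can be sketched as lists of hidden-layer sizes. This example reads "the same number of neurons" as the same number of neurons per hidden layer, which is an assumption about the intended setup; the specific widths and depths, and the helper names `depth_configs`, `width_configs`, and `count_parameters`, are hypothetical values chosen for illustration.

```python
def depth_configs(width, max_depth):
    """Vary "depth": 1..max_depth hidden layers, each with `width` neurons."""
    return [[width] * depth for depth in range(1, max_depth + 1)]


def width_configs(depth, widths):
    """Vary "width": a fixed number of hidden layers, each with w neurons."""
    return [[w] * depth for w in widths]


def count_parameters(n_in, hidden, n_out):
    """Count weights plus biases of a fully connected network."""
    sizes = [n_in] + list(hidden) + [n_out]
    return sum(a * b + b for a, b in zip(sizes, sizes[1:]))


# Hypothetical settings: 784 inputs (28x28 pixels) and 10 output classes.
deep = depth_configs(width=100, max_depth=4)
wide = width_configs(depth=1, widths=[64, 128, 512, 1024])

deep_params = [count_parameters(784, h, 10) for h in deep]
wide_params = [count_parameters(784, h, 10) for h in wide]
```

Counting parameters alongside each configuration helps when reading timing curves, since training and prediction cost for a fully connected network grows with the number of weights.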

Test results: Figures 2.11–2.13 show the training time, prediction time, and classification accuracy of the FCNN using the Tanh nonlinear activation function for each framework. The number of epochs was set to 10 for all tests.

Figure 2.11 Training time of FCNN based on Tanh activation under changing "depth"

Figure 2.12 Prediction time of FCNN based on Tanh activation under changing "depth"

Figure 2.13 Classification accuracy of FCNN based on Tanh activation under changing "depth"

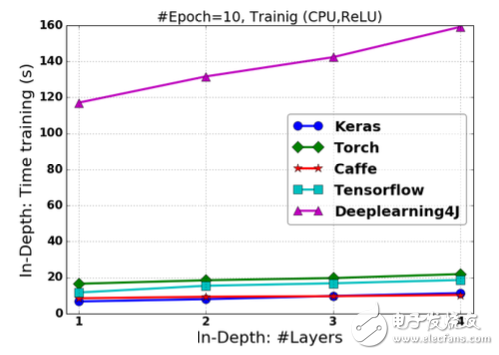

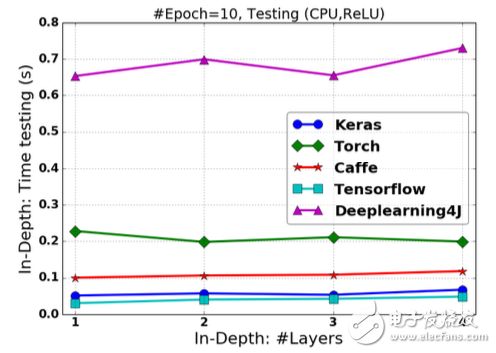

Similarly, Figures 2.14–2.16 show the corresponding results for the FCNN using the ReLU nonlinear activation function for each framework.

Figure 2.14 Training time of FCNN based on ReLU activation under changing "depth"

Figure 2.15 Prediction time of FCNN based on ReLU activation under changing "depth"
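For reference, the two activation functions compared in these tests and their derivatives (used during backpropagation) can be written in a few lines. This is a scalar sketch using the standard definitions; the function names are chosen for this example, and real frameworks apply vectorized versions of the same formulas.

```python
import math


def tanh(x):
    """Hyperbolic tangent: squashes x into the range (-1, 1)."""
    return math.tanh(x)


def tanh_grad(x):
    """Derivative of tanh: 1 - tanh(x)^2."""
    return 1.0 - math.tanh(x) ** 2


def relu(x):
    """Rectified linear unit: passes positive values, zeroes the rest."""
    return max(0.0, x)


def relu_grad(x):
    """Derivative of ReLU (taken as 0 at x == 0 by convention here)."""
    return 1.0 if x > 0 else 0.0


samples = [-2.0, 0.0, 3.0]
relu_out = [relu(x) for x in samples]
tanh_out = [tanh(x) for x in samples]
```

ReLU needs only a comparison per value, whereas tanh evaluates exponentials, which is one reason the two can differ in measured training and prediction time.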
