Digital image processing is an interdisciplinary field. As computer science and technology have developed, image processing and analysis have gradually formed their own scientific system, and new processing methods keep emerging. Although the field's history is short, it has attracted wide attention. First, vision is the most important means of human perception, and images are the basis of vision. Digital images have therefore become an effective tool for researchers in psychology, physiology, computer science, and other fields who study visual perception. Second, image processing is in growing demand in large-scale applications such as military systems, remote sensing, and meteorology.

Image segmentation is the technique and process of dividing an image into regions with distinct properties and extracting the objects of interest. It is the key step from image processing to image analysis. Existing segmentation methods fall mainly into four categories: threshold-based methods, region-based methods, edge-based methods, and methods based on specific theories. Since 1998, researchers have continually improved the original segmentation methods, applied new theories and methods from other disciplines to image segmentation, and proposed many new segmentation methods. The objects extracted by segmentation can be used in image semantic recognition, image search, and similar applications.

What are the methods of image segmentation?

1 Region-based image segmentation

The histogram thresholding method, the region growing method, the random-field-model method, and the relaxation labeling method are all commonly used in image segmentation, and all are region-based methods.

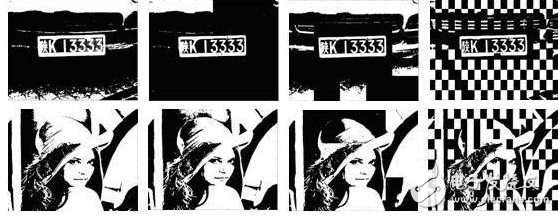

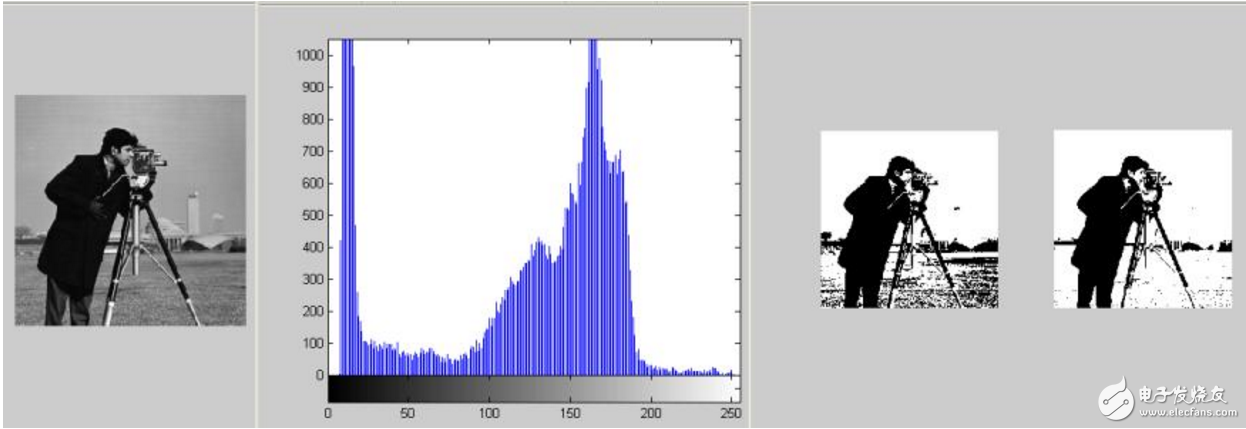

(1) Histogram threshold segmentation divides the gray-level histogram (one-dimensional or multi-dimensional) of an image into several classes. It uses one or more thresholds chosen under some criterion. Pixels whose gray values fall into the same class are considered to belong to the same object. Usable criteria include:

- the histogram valley;
- minimum within-class variance (equivalently, maximum between-class variance);
- maximum entropy, in its various forms;
- minimum error rate;
- moment preservation;
- maximum busyness, defined via the co-occurrence matrix.

The weakness of thresholding is that it considers only the gray-level information of the image and ignores spatial information. It therefore struggles in two cases: images with no obvious gray-level difference between objects, and images where the gray-value ranges of different objects overlap heavily. In both cases accurate results are difficult to obtain.
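The maximum between-class variance criterion above (Otsu's method) can be sketched in a few lines of Python for a single threshold. This is a minimal illustration under assumptions: the image is given as a flat list of integer gray values in `[0, levels)`, and the function name `otsu_threshold` is hypothetical.

```python
def otsu_threshold(pixels, levels=256):
    """Return the threshold t that maximises the between-class variance.

    Pixels with value <= t form class 0 and the rest form class 1.
    `pixels` is a flat list of integer gray values in [0, levels).
    """
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(g * h for g, h in enumerate(hist))

    best_t, best_var = 0, -1.0
    w0 = 0      # number of pixels in class 0
    sum0 = 0    # sum of gray values in class 0
    for t in range(levels):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue  # one class is empty, so this split is not usable
        mu0 = sum0 / w0
        mu1 = (sum_all - sum0) / w1
        # Between-class variance, up to the constant factor 1 / total**2
        var_between = w0 * w1 * (mu0 - mu1) ** 2
        if var_between > best_var:
            best_t, best_var = t, var_between
    return best_t
```

Pixels above the returned threshold can then be taken as foreground, for example `mask = [p > t for p in pixels]`. Maximizing the between-class variance is equivalent to minimizing the within-class variance, because the two always sum to the fixed total variance.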

(2) Region growing is one of the oldest image segmentation methods. The earliest region-growing segmentation method was proposed by Levine et al. There are generally two approaches:

- Start from a small block or seed region inside the object to be segmented. Repeatedly add surrounding pixels to it according to some rule, until all the pixels of the object have been merged into one region.
- First divide the image into many small homogeneous regions, for example small regions whose pixels all share the same gray value. Then merge small regions into larger ones according to some rule.

A typical region growing method is the facet-model-based method proposed by T. C. Pong et al. The inherent drawback of region growing is that it often over-segments, dividing the image into too many regions.
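The first (seeded) variant can be sketched as a breadth-first search. This minimal Python illustration is not the algorithm of Levine et al. or Pong et al. Its homogeneity rule (gray value within `tol` of the seed value), its use of 4-connectivity, and the name `region_grow` are assumptions chosen for clarity.

```python
from collections import deque


def region_grow(image, seed, tol):
    """Grow a region from `seed` = (row, col) over 4-connected neighbours
    whose gray value differs from the seed's value by at most `tol`.
    `image` is a list of rows; returns the set of (row, col) in the region."""
    rows, cols = len(image), len(image[0])
    seed_val = image[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in region
                    and abs(image[nr][nc] - seed_val) <= tol):
                region.add((nr, nc))   # pixel satisfies the growth rule
                queue.append((nr, nc))
    return region
```

Comparing against the seed value keeps the rule simple. A common alternative compares against the running mean of the region, which adapts to slow shading but can drift across weak boundaries.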

(3) The random-field-model method uses a Markov random field (MRF) as the image model and assumes that the random field follows a Gibbs distribution. Segmenting an image with an MRF model involves three problems:

- defining the neighborhood system;
- choosing the energy function and estimating its parameters;
- choosing a strategy for minimizing the energy function to obtain the maximum a posteriori (MAP) estimate.

Because the neighborhood system is generally defined in advance, the main problems are the latter two. S. Geman was the first to apply the Gibbs-distribution-based MRF model to image processing. That work covered four main points:

- It discussed in detail the neighborhood system, the energy function, the Gibbs sampling method, and other aspects of the MRF model.
- It proposed minimizing the energy function with simulated annealing.
- It proved that simulated annealing converges.
- It presented an application of the MRF model to image restoration.

Building on this work, a large number of MRF-based image segmentation algorithms have been proposed.
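The energy-function and minimization problems can be made concrete with a small sketch, which rests on several assumptions:

- A Potts-style smoothness prior adds a penalty `beta` for each 4-neighbour with a different label.
- A Gaussian likelihood uses known class means `means` and a shared `sigma`.
- The function name `icm_segment` is hypothetical.

For the minimization, the sketch swaps simulated annealing for iterated conditional modes (ICM). ICM is a simpler, deterministic method that greedily relabels each pixel, and it only reaches a local minimum of the energy.

```python
def icm_segment(image, means, sigma, beta=1.0, iterations=5):
    """Iterated conditional modes (ICM) for MRF segmentation.

    Energy of label k at pixel (r, c):
        (image[r][c] - means[k])**2 / (2 * sigma**2)       # data term
        + beta * (number of 4-neighbours labelled != k)    # Potts prior
    """
    rows, cols = len(image), len(image[0])
    label_ids = range(len(means))

    def data(r, c, k):
        return (image[r][c] - means[k]) ** 2 / (2 * sigma ** 2)

    # Start from the maximum-likelihood labelling (nearest class mean)
    labels = [[min(label_ids, key=lambda k: data(r, c, k)) for c in range(cols)]
              for r in range(rows)]
    for _ in range(iterations):
        changed = False
        for r in range(rows):
            for c in range(cols):
                nbrs = [labels[nr][nc]
                        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                        if 0 <= nr < rows and 0 <= nc < cols]
                # Pick the label with the lowest local (conditional) energy
                best = min(label_ids, key=lambda k: data(r, c, k)
                           + beta * sum(1 for n in nbrs if n != k))
                if best != labels[r][c]:
                    labels[r][c] = best
                    changed = True
        if not changed:
            break  # no pixel changed: a local minimum has been reached
    return labels
```

Consider a 5x5 image whose left three columns are 0 and right two columns are 100, with one noisy pixel of value 60 in the dark region. Using `means=[0, 100]` and `sigma=20`, the likelihood alone assigns that pixel to class 1, since it is nearer to 100. The neighbourhood term pulls it back to class 0.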

(4) In labeling, each region the image is to be divided into is represented by a different label, and every pixel is assigned one of these labels in some manner. Connected pixels carrying the same label form the region that label represents. Labeling methods often use relaxation techniques to assign a label to each pixel. These fall into three types: discrete relaxation, probabilistic relaxation, and fuzzy relaxation. Smith et al. first used relaxation labeling for image segmentation, and many relaxation-based segmentation algorithms followed. Relaxation labeling can also be used for edge detection, target recognition, and similar tasks.
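Probabilistic relaxation can be illustrated with the classic Rosenfeld-Hummel-Zucker style update. In it, each pixel's label probabilities are reinforced by compatible labels in its neighbourhood: p_i(λ) ← p_i(λ)(1 + q_i(λ)) / Σ_μ p_i(μ)(1 + q_i(μ)). Here q_i(λ) is the neighbourhood support for label λ. The sketch below makes several assumptions:

- It works on a single row of pixels.
- There are two labels: background 0 and object 1.
- The left and right neighbours are weighted equally.
- The compatibility is +1 for equal labels and -1 otherwise.
- The function name `relax_labels` is hypothetical.

```python
def relax_labels(p1, iterations=10):
    """Probabilistic relaxation labelling on a 1-D row of pixels.

    p1[i] is the initial probability that pixel i is 'object' (label 1);
    label 0 ('background') has probability 1 - p1[i].
    Assumed compatibility: r(l, m) = +1 if l == m else -1.
    Returns the most probable label of each pixel after relaxation.
    """
    p = [[1.0 - x, x] for x in p1]          # p[i][label]
    n = len(p)
    for _ in range(iterations):
        new_p = []
        for i in range(n):
            nbrs = [j for j in (i - 1, i + 1) if 0 <= j < n]
            support = []
            for lab in (0, 1):
                # q_i(lab): neighbour average of sum_m r(lab, m) * p_j(m)
                q = sum(sum((1 if lab == m else -1) * p[j][m] for m in (0, 1))
                        for j in nbrs) / len(nbrs)
                support.append(p[i][lab] * (1.0 + q))
            total = sum(support)
            new_p.append([s / total for s in support])   # renormalise
        p = new_p   # synchronous (parallel) update of all pixels
    return [max((0, 1), key=lambda lab: pi[lab]) for pi in p]
```

For example, `relax_labels([0.1, 0.2, 0.6, 0.1, 0.2, 0.9, 0.8, 0.9, 0.4, 0.8])` relabels the ambiguous pixels at index 2 (0.6) and index 8 (0.4) to match their neighbours. The result is five background pixels followed by five object pixels. Thresholding each pixel independently at 0.5 would get both of those pixels wrong.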

2 Edge-based image segmentation

Edge-based segmentation is closely related to edge detection theory. Most of these methods rely on local information. Generally, the maxima of the image's first-order derivative or the zero crossings of its second-order derivative provide the basic criterion for deciding whether a point is an edge point. Curve-fitting techniques can then produce continuous curves that form the boundaries between regions. By the way they detect edges, edge detection methods can be roughly divided into five categories:

- methods based on local image functions;
- image filtering methods;
- methods based on reaction-diffusion equations;
- methods based on boundary curve fitting;
- active contour methods.

(1) Methods based on local image functions treat the gray level as height. They fit a surface to the data in a small window and then determine edge points from the fitted surface.
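A minimal Python sketch of this idea fits the simplest surface, a plane z = a + b·x + c·y, by least squares in a 3x3 window. The fitted slopes give the gradient, and a point is marked as an edge when the gradient magnitude exceeds a chosen `threshold`. Facet models in the literature fit richer surfaces. The plane, the window size, and the function names here are illustrative assumptions.

```python
import math


def plane_fit_gradient(image, r, c):
    """Least-squares fit of z = a + b*x + cy*y over the 3x3 window centred
    at (r, c), with x along columns and y along rows; returns (b, cy).

    For offsets x, y in {-1, 0, 1} the normal equations decouple
    (sum x = sum y = sum x*y = 0, sum x**2 = sum y**2 = 6), so
    b = sum(x*z) / 6 and cy = sum(y*z) / 6.
    """
    sx = sy = 0.0
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            z = image[r + dy][c + dx]
            sx += dx * z
            sy += dy * z
    return sx / 6.0, sy / 6.0


def edge_points(image, threshold):
    """Interior pixels whose fitted-plane gradient magnitude exceeds threshold."""
    rows, cols = len(image), len(image[0])
    edges = []
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            gx, gy = plane_fit_gradient(image, r, c)
            if math.hypot(gx, gy) > threshold:
                edges.append((r, c))
    return edges
```

The plane-fit slopes are the Prewitt operator responses scaled by 1/6.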

(2) Image filtering methods rest on one fact: differentiating the convolution of a filter operator with the image is equivalent to convolving the image with the derivative of the operator. Once the first or second derivative of the operator is given in advance, smoothing the image and differentiating it take a single step. The core problem of this approach is therefore filter design.
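The identity this method rests on follows from differentiating under the convolution integral. It is shown here in one dimension, assuming a smooth, rapidly decaying operator $g$ such as a Gaussian and a locally integrable image $f$. In two dimensions the same argument applies to each partial derivative, so the Laplacian of the smoothed image is the image convolved with the Laplacian of the operator.

```latex
\frac{d}{dx}\,(g * f)(x)
  = \frac{d}{dx}\int_{-\infty}^{\infty} g(x - t)\, f(t)\, dt
  = \int_{-\infty}^{\infty} g'(x - t)\, f(t)\, dt
  = (g' * f)(x),
\qquad
\frac{d^2}{dx^2}\,(g * f) = g'' * f,
\qquad
\nabla^2 (G * f) = (\nabla^2 G) * f .
```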

The most commonly used filters are the first-order and second-order derivatives of the Gaussian function. Canny considered the first derivative of the Gaussian to be a good approximation of the optimal filter he derived. Applying the Laplacian operator to the Gaussian gives the LoG (Laplacian of Gaussian) filter operator. It was first proposed by Marr, a founder of computational vision. Filters studied in recent years include steerable filters and B-spline filters.
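Both criteria can be tried on a one-dimensional step edge. This is a simplified 1-D illustration. It is not Canny's full detector, which adds non-maximum suppression and hysteresis thresholding, and it is not a 2-D LoG. It does three things:

- It convolves a signal with sampled first and second derivatives of a Gaussian.
- It reports where the first-derivative response is strongest.
- It reports second-derivative zero crossings whose jump exceeds `min_slope`.

The kernel radius of 3σ, the edge-replicating border, and all function names are assumptions.

```python
import math


def convolve_same(signal, kernel):
    """1-D convolution with edge replication; output has len(signal)."""
    k = len(kernel) // 2
    n = len(signal)
    # out[i] = sum_x kernel(x) * signal(i - x), with x = j - k
    return [sum(w * signal[min(max(i - (j - k), 0), n - 1)]
                for j, w in enumerate(kernel))
            for i in range(n)]


def gaussian_kernels(sigma):
    """Sampled (unnormalised) first and second derivatives of a Gaussian."""
    radius = int(math.ceil(3 * sigma))
    xs = range(-radius, radius + 1)
    g = [math.exp(-x * x / (2 * sigma ** 2)) for x in xs]
    d1 = [-x / sigma ** 2 * gx for x, gx in zip(xs, g)]
    d2 = [(x * x / sigma ** 4 - 1 / sigma ** 2) * gx for x, gx in zip(xs, g)]
    return d1, d2


def first_derivative_peak(signal, sigma):
    """Index of the largest |response| to the derivative-of-Gaussian filter."""
    d1, _ = gaussian_kernels(sigma)
    resp = convolve_same(signal, d1)
    return max(range(len(resp)), key=lambda i: abs(resp[i]))


def second_derivative_zero_crossings(signal, sigma, min_slope):
    """Indices i where the second-derivative response changes sign between
    i and i + 1 with a jump larger than min_slope (weak crossings dropped)."""
    _, d2 = gaussian_kernels(sigma)
    resp = convolve_same(signal, d2)
    return [i for i in range(len(resp) - 1)
            if resp[i] * resp[i + 1] < 0
            and abs(resp[i] - resp[i + 1]) > min_slope]
```

Take a step from 0 to 10 between indices 9 and 10, with σ = 2. The first-derivative peak and the only strong zero crossing both fall at the step. The `min_slope` test is just one simple way to reject weak responses. Deciding whether every maximum or crossing is a true edge point remains an open issue.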

Image filtering methods locate edges at maxima of the first derivative or zero crossings of the second derivative of the smoothed image. This raises an unavoidable question: is the pixel at such a maximum or zero crossing really an edge point?

(3) Methods based on reaction-diffusion equations derive from traditional multi-scale filtering with the Gaussian kernel. I have read little of the literature on these methods, so I will not say more about them here.
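The following sketch illustrates only the diffusion-equation family, not reaction-diffusion segmentation itself. Running linear diffusion (the heat equation) for time t is equivalent to Gaussian smoothing with variance 2t, which is the link to multi-scale Gaussian filtering. The well-known Perona-Malik scheme below makes the diffusion coefficient depend on the local difference, so strong edges diffuse slowly while small fluctuations are smoothed. The conductance g(d) = 1/(1 + (d/κ)²), the 1-D setting, the parameter values, and the function name are assumptions.

```python
def perona_malik_1d(u, kappa, dt=0.25, iterations=20):
    """Explicit 1-D Perona-Malik diffusion with g(d) = 1 / (1 + (d/kappa)**2).

    Large neighbour differences (likely edges) get small conductance and
    diffuse slowly; small differences (likely noise) are smoothed.
    With g == 1 this is the linear heat equation (Gaussian smoothing).
    Since g <= 1, any dt <= 0.5 keeps each update a weighted average of a
    pixel and its neighbours, so the explicit scheme stays stable.
    """
    def g(d):
        return 1.0 / (1.0 + (d / kappa) ** 2)

    u = [float(v) for v in u]
    n = len(u)
    for _ in range(iterations):
        new_u = u[:]
        for i in range(n):
            # Zero-flux (Neumann) boundary: a missing neighbour equals u[i]
            d_east = (u[i + 1] if i + 1 < n else u[i]) - u[i]
            d_west = (u[i - 1] if i > 0 else u[i]) - u[i]
            new_u[i] = u[i] + dt * (g(d_east) * d_east + g(d_west) * d_west)
        u = new_u
    return u
```

On a signal with small alternating noise on two plateaus (0/2 and 100/102), `kappa=5` flattens the noise within each plateau and leaves the step between them almost intact. Replacing `g` with a constant 1 would blur the step as well.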

(4) Methods based on boundary curve fitting represent the boundary between regions by a plane curve. They use information such as the image gradient to find the curve that correctly represents the boundary, and so segment the image. These methods produce the boundary curve directly, instead of the discrete, unrelated edge points that general methods yield. This greatly helps the high-level processing that follows segmentation, such as object recognition. Even when edge points have been found in the usual way, describing them with curves is often an effective way to support high-level processing.

L. H. Staib et al. proposed describing curves with a Fourier parametric model. Using Bayes' theorem, they formulate an objective function based on the maximum a posteriori principle and determine the Fourier coefficients by maximizing it. In practice, an initial curve is chosen from experience with segmenting similar images. For a specific image, the parameters of this curve are then adjusted by optimizing the objective function, so that the curve fits that image's data. This method is well suited to segmenting medical images. Other curve description methods have also been studied in recent years:

- A. Goshtasby describes in detail how to design and fit two- and three-dimensional shapes with rational Gaussian curves and surfaces.
- R. Legault et al. give a curve-smoothing method.
- M. F. Wu et al. propose a bivariate three-dimensional Fourier descriptor for describing three-dimensional surfaces.
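A simple way to describe a closed boundary with Fourier coefficients is the complex Fourier descriptor. The contour points are treated as complex numbers z = x + iy and transformed with a discrete Fourier transform. The curve is then rebuilt from only the lowest-order coefficients, which smooths it. This is not Staib et al.'s Bayesian fitting procedure, which estimates the coefficients by maximizing a posterior objective on image data. The descriptor form and the function names are assumptions.

```python
import cmath
import math


def fourier_descriptors(points):
    """DFT of a closed contour given as (x, y) points, treated as x + iy.
    Coefficient k is (1/n) * sum_t z_t * exp(-2*pi*i*k*t/n)."""
    z = [complex(x, y) for x, y in points]
    n = len(z)
    return [sum(z[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) / n
            for k in range(n)]


def reconstruct(coeffs, n_points, keep):
    """Rebuild a contour of n_points points from the frequencies
    k = -keep..keep only (negative k are stored at index k % n)."""
    n = len(coeffs)
    out = []
    for t in range(n_points):
        s = 0j
        for k in range(-keep, keep + 1):
            s += coeffs[k % n] * cmath.exp(2j * math.pi * k * t / n_points)
        out.append((s.real, s.imag))
    return out
```

Take a circle of radius 5 centred at (2, 3) with a small five-lobed ripple, sampled at 16 points. Reconstructing with `keep=1` removes the ripple and recovers the circle. Coefficient 0 is the centroid of the points, and coefficient 1 carries the radius.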

(5) The active contour, also known as the snake model, is a deformable (elastic) model originally proposed by Kass et al. Active-contour edge detection assumes that the contour of each region in the image should be a smooth curve. The energy of each contour has two parts: internal energy and external energy, where external energy consists of image energy and control energy.

- The internal energy expresses the smoothness constraint on the contour.
- The image energy is determined by the gray level and gradient at the corresponding contour point, and by its radius of curvature where the point is a corner.
- The control energy represents attraction to or repulsion from fixed points in the image plane.

Minimizing the energy function with the variational method yields the contour that corresponds to the region boundary.
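The energy minimization can also be done with a greedy discrete search, in the spirit of Williams and Shah's greedy snake algorithm, instead of the variational solution described above. Each contour point moves to the position in its 3x3 neighbourhood that minimizes a weighted sum of three terms: a continuity term, a curvature term, and an external (image) energy. The weights, the exact energy terms, and the function names are assumptions. The caller supplies `ext_energy`, which in a real image might be the negative gradient magnitude.

```python
import math

# (0, 0) comes first so that, on ties, a point prefers to stay where it is
OFFSETS = [(0, 0)] + [(dx, dy) for dx in (-1, 0, 1) for dy in (-1, 0, 1)
                      if (dx, dy) != (0, 0)]


def greedy_snake(points, ext_energy, alpha=1.0, beta=0.1, gamma=1.0,
                 iterations=50):
    """Greedy active-contour minimisation on an integer grid.

    Each point moves to the 3x3 neighbour position minimising
    alpha * continuity + beta * curvature + gamma * ext_energy(position).
    `points` is a closed contour of (x, y) integer pairs.
    """
    pts = [tuple(p) for p in points]
    n = len(pts)
    for _ in range(iterations):
        # Average spacing; the continuity term pulls spacings towards it
        mean_d = sum(math.dist(pts[i], pts[i - 1]) for i in range(n)) / n
        moved = 0
        for i in range(n):
            prev, nxt = pts[i - 1], pts[(i + 1) % n]
            best, best_e = None, math.inf
            for dx, dy in OFFSETS:
                cand = (pts[i][0] + dx, pts[i][1] + dy)
                cont = (mean_d - math.dist(cand, prev)) ** 2
                # Squared discrete second difference approximates curvature
                curv = ((prev[0] - 2 * cand[0] + nxt[0]) ** 2
                        + (prev[1] - 2 * cand[1] + nxt[1]) ** 2)
                e = alpha * cont + beta * curv + gamma * ext_energy(cand)
                if e < best_e:
                    best, best_e = cand, e
            if best != pts[i]:
                pts[i] = best
                moved += 1
        if moved == 0:
            break  # no point moved: the contour has settled
    return pts
```

As a synthetic check, an external energy of (|p| - 10)² draws a 16-point contour, started on a circle of radius 20 around the origin, to about radius 10. Keeping `beta` small stops the curvature term from shrinking the contour past the target boundary.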
